Building a Doorstep Info Station with Nerves, Raspberry Pi & ESP32-based E-Ink

When you think of entry-level projects for newcomers to the IoT realm, one of the first things that comes to mind is: build a weather station!

There are hundreds of tutorials explaining how to do this, including the book Build a Weather Station with Elixir and Nerves by A. Koutmos, B. A. Tate and F. Hunleth (Frank Hunleth being one of the creators of Nerves), so we won't try to write yet another one. Instead, we'll show you how to build a custom information station powered by the Phoenix Framework that can run pretty much anywhere (though certain design choices will be made with Nerves and the Raspberry Pi in mind), feeding pages rendered as pictures to an ESP32-based E-Ink display.

We'll explain the architectural design choices, design the Phoenix server and build the client to run on the ESP32 microcontroller. Enjoy the read!

Shaping the idea & functional requirements

The premise of my story is quite simple: I've been seeking ways to declutter my digital life, and reducing my reliance on grabbing my phone for just about everything I need to look up is an important aspect. One of the activities in which I used to rely on my phone is looking up public transport timetables in my neighbourhood.

It takes me about 10 minutes to get from home to my local tram stop, and about 8 minutes to get to the nearest bus stop - and while I get ready to leave, I'd like to keep an eye on when I need to head out without having to reach for my phone. So I thought having the next few departures displayed at the doorstep would help a lot.

Then, when you think of other things such as checking the weather or viewing a to-do list, having them at hand as you're leaving home is also a nice idea, so the system should support several data display modules.

So the idea is pretty simple: we'd like to have a device that displays an information panel in the spirit of this, allowing us to switch between a number of modules:

Non-functional requirements: devices & power

Here's where it gets interesting: I have several constraints that limit my options here; some of these are about my own programming preferences, abilities and productivity, others are related to power consumption and the specifics of my household.

Firstly, I don't have a smart home system and a dedicated power supply for appliances like this, which yields a number of limitations:

As we get to the requirements for the backend server, I should say that while I do have an educational background in C/C++ basics, I'm very rusty (no pun intended) and I'd like to come up with a solution that allows me to create a slim foundation to deploy on the ESP32 device so that I can spend most of the time coding Elixir on the backend.

So I'd like to build a codebase running on the frontend terminal that just loads the data via HTTP from the Phoenix server and displays it with as little processing as possible - I want to be able to define the frontend look and behaviour server-side so the frontend can just take this and render, using the limited resources it has.

Picking the hardware

As I said, I was looking for a low-power, battery-powered device that I could just charge from time to time. To enable us to create a flexibly controlled UI, I wanted a touchscreen which would preferably be an e-ink display - not only for battery life, but also because it's a good fit for the calmness I want to achieve with my digital stuff. And, of course, it needs to be connected to Wi-Fi.

All of this combined meant I would mostly be looking at devices based on the ESP32 microcontroller platform, which is powerful enough for the basic processing I need (making HTTP requests, drawing UIs on a screen, handling touch, etc.) and comes with built-in Wi-Fi.

I explored several Lilygo devices like the T5 e-Paper, which are quite popular and come in a variety of packages and form factors, but then I found an interesting range of devices from a European vendor: the Soldered Inkplate. It seems to be well-maintained and offers a broad choice of devices: different sizes, colour or monochrome, touch or non-touch e-ink displays, and included housings. I picked the Inkplate 4 TEMPERA version, since it looks cool as a little control panel next to my intercom and has all the features I need, including a 4-inch e-ink touchscreen, which is frontlit by the way, so I can use it in dim conditions as well. It also has a lithium battery and a USB charging unit, and the vendor claims even years of battery life in deep sleep mode; my project will surely not be optimized to this extent, but it's still an impressive feat.

The device offers much more than that: Bluetooth, sensors for gestures, air quality, temperature and humidity, an accelerometer, a gyroscope, a buzzer, as well as GPIO. So it's a pretty flexible basis for building more ambitious projects than this, in a package that has a lot built in and lets you just sit down and code in the Arduino IDE. Cool stuff!

So this is the client (or terminal) hardware. While the Inkplate could run a simple HTTP server itself, I'd like to back the system with a traditional relational DB and later expand my Phoenix-based application with an admin panel or anything else. I could essentially run it anywhere, in the cloud or in my local network, but I thought we'd experiment with this setup:

We could just deploy this app to any OS running on the Pi, but let's make it even more interesting: what if we wanted to run it on bare-metal BEAM? This introduces additional limitations that we'll cover later, so take note of this; on the other hand, we'll explore how powerful a BEAM-and-Elixir-only environment can be. We'll think a bit outside the box in how we approach things, but we'd still like to build something elegant and well-organized.

Architectural idea: Rendering images, defining links

Building UIs for the Inkplate is an interesting topic in itself, and it's cleverly designed: the vendor provides a UI designer that helps generate the relevant C code. However, as my low-level programming skills were rather rusty and I wanted to keep all UI generation on the backend to iterate more efficiently, I decided not to use it. Otherwise, I'd have to either change the Inkplate application code frequently, or design an API for UI definitions between the frontend and the backend and implement it on both sides.

The first thing I thought was: why not use Phoenix to render the content in some way, so that the Inkplate only has to do minimal work to display the UI? Can we render HTML so that the Inkplate can act as a minimal web browser?

Well, parsing HTML in a basic way (e.g., for scraping purposes) is one thing, but transforming parsed HTML into a rendered page is a different story, and even the lightest engines such as

So the limitations of the ESP32 meant I would either have to design an API for drawing the UI on the Inkplate driven by JSON data from the server, or just render the UI on the server as an image and send it to the Inkplate, which happily renders pictures loaded from the web.

For serving HTML pages rendered into pictures from Phoenix, I first thought about the good old wkhtmltoimage tool. That would be fine if we deployed to a Pi running a full OS on which we could just install the wkhtmltopdf package, but with Nerves it's rather impractical: the tool needs to be installed in the system (in this case, baked into the Nerves system) and it depends on the Qt library, which makes things even worse.

This leaves us with rather slim chances of rendering HTML to images on the Phoenix + Nerves server, but we can still look for a similar solution that relies on an existing, well-defined markup. Enter SVG (Scalable Vector Graphics): an XML-based format commonly used for vector graphics on screen and in print alike, which, to a large extent, meets our requirements as a well-defined layout format. And it turns out there is an Elixir NIF for the Rust-written resvg library, allowing us to convert SVGs to PNGs that we can serve to the Inkplate for display.

So, to conclude this:

Can we do Elixir on an ESP32?

Writing the entire project in Elixir seemed like an interesting idea that I wouldn't even have considered a few years ago. Is it even a viable way to go if we're talking about microcontrollers?

I have good news and bad news. Have you watched Davide Bettio's ElixirConf EU 2025 keynote? According to Davide, 2025 is Elixir's year of interoperability, and AtomVM is a huge part of that: it's a tiny implementation of the BEAM that can be ported to a variety of resource-constrained environments, such as WebAssembly, or microcontrollers such as the STM32 or ESP32.

So, thanks to AtomVM, I can deploy Elixir code to my ESP32 board (and I have), and it runs just fine. Note, however, that the implemented subset of the standard library is minimal and the behaviour of system-dependent IO functions is not guaranteed to match what we know from the official BEAM, so don't expect many libraries to work out of the box. For example, I had to go somewhat out of my way to send HTTP requests and parse the responses, which is normally dead simple with any Erlang or Elixir client on a PC or an ARM device.

I could overcome this with some additional tinkering, but there's also the Inkplate Arduino library, written in C++, which I would need for controlling the device's peripherals without reworking everything from scratch. I'd have to build AtomVM with the library as an ESP-IDF component and expose the functions I need (touchscreen control, etc.) to Elixir as NIFs.

This would take a weekend of CMake wrangling and polishing the Elixir API, which is doable, but I'll leave it for another time; for now, I've stuck to a C++ implementation on the terminal. That said, I'll show how to build it in a way that lets us more or less code it once and forget about it: the server will drive all content generation and rendering on its own.

ESP32 client overview

The client program, which is deployed to the Inkplate using the Arduino IDE, does this:

The C++ source code of the client device can be found here; we won't cover it in much detail, as we'd like to focus on developing the Elixir server. What's important is that the client is, at this point, a largely write-and-forget codebase.

We have what we wanted:

This is good to a large extent, as we're planning to render static weather and transit departure data refreshed at a fixed interval. Note, however, that this also means the server can't render or process data from the Inkplate's sensors and peripherals.

In our case, we currently only use the device's battery gauge, and we work around this limitation by overlaying the battery status on top of the current page client-side. If we wanted the server to render it in a custom way, or to access the Inkplate's other peripherals (temperature, humidity, air quality, gestures, etc.), we'd have to send the readings over the wire somehow: via a request to a dedicated webhook, by piggybacking them on requests we make anyway, or over a WebSocket connection.
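To make the piggybacking option a bit more concrete, here's a hypothetical sketch: the client appends its readings as query parameters to requests it makes anyway (say, GET /weather?battery=3.91), and the server extracts only the parameters it recognizes. Neither the parameter names nor the StationServer.ClientTelemetry module exist in the current client or server; in a Phoenix controller, the input could come from conn.query_string.

```elixir
defmodule StationServer.ClientTelemetry do
  # Hypothetical whitelist of query parameter names mapped to atoms,
  # so arbitrary client input never creates new atoms.
  @known %{"battery" => :battery, "temperature" => :temperature, "humidity" => :humidity}

  @doc "Extracts known numeric readings from a raw query string."
  def from_query(query) when is_binary(query) do
    query
    |> URI.decode_query()
    |> Enum.reduce(%{}, fn {key, raw}, acc ->
      with {:ok, name} <- Map.fetch(@known, key),
           {value, ""} <- Float.parse(raw) do
        Map.put(acc, name, value)
      else
        # Unknown parameters and non-numeric values are ignored.
        _ -> acc
      end
    end)
  end
end
```

For example, from_query("battery=3.91&humidity=40&page=2") keeps only the two readings, as floats. Mapping names through a fixed map rather than calling String.to_atom/1 on client input is the main design choice here.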

Power consumption is another area for potential improvement. Right now, with a page refreshed every 15 minutes and very little other usage, the battery lasts just under a week, which is already good enough for my current needs.

I implemented switching Wi-Fi off and putting the device into light sleep mode, from which it is woken up by an interrupt signalled by the touch sensor. One possible improvement is putting the device into the ESP32's deep sleep mode overnight: deep sleep with wake-on-touch is possible, but the wake-up lag would be inconvenient during the day. You might have a different use case in which it would be fast enough, though, especially if you don't need to touch the device often.

Implementing the Phoenix server

Note that this isn't a complete walkthrough of the app we uploaded to our GitHub repository, which contains way more than this and is structured differently from the very basic version I'll show here. I'll also follow up on other aspects of the app, including transit data integration, in further articles.

We need to bootstrap the Elixir project with both Phoenix and Nerves. I opted to generate a Phoenix app first and add the Nerves tooling once the basics were in place.

```shell
# Generate a stripped-down Phoenix app without unneeded stuff
mix phx.new station_server --no-html --no-gettext --no-live --no-ecto --no-assets --no-mailer --no-tailwind --no-esbuild
```

Then add the following to the dependencies in mix.exs:

```elixir
defp deps do
  [
    # ...
    {:resvg, "~> 0.5.0"}, # for rendering SVG templates to PNG on the server
    {:tzdata, "~> 1.1.3"} # will come in handy when processing weather data
  ]
end
```

We'll be processing two types of requests: JSON page descriptions and the PNG images they point to. A page description looks like this:

```json
{
  "touch": [
    {"begin": [0, 500], "end": [200, 600], "url": "/bus"},
    {"begin": [200, 500], "end": [400, 600], "url": "/news"},
    {"begin": [400, 500], "end": [600, 600], "url": "/todo"}
  ],
  "refresh_every": 900000,
  "picture": "/images/weather.png"
}
```

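picture is the path of the image to display and refresh_every is the reload interval in milliseconds (900000 ms is 15 minutes), while touch defines clickable regions on the screen, each given by two opposite corners (begin and end, as [x, y] pixel coordinates) and the URL of the JSON page description to load when the region is tapped.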
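To illustrate the semantics of touch, here's a sketch of the lookup a client can perform when the screen is tapped, written in Elixir for illustration only (the actual client does this in C++). The module name is hypothetical, and so is the edge convention: left/top inclusive, right/bottom exclusive, so adjacent regions from the example payload above never overlap.

```elixir
defmodule StationServer.TouchRegions do
  @moduledoc """
  Hypothetical helper matching a tap against the `touch` regions of a page
  description. Assumes `begin` and `end` are the top-left and bottom-right
  corners as [x, y] pixel coordinates.
  """

  @doc "Returns the URL of the first region containing the point, or nil."
  def url_at(regions, {x, y}) do
    Enum.find_value(regions, fn %{"begin" => [x0, y0], "end" => [x1, y1], "url" => url} ->
      # Left/top edges are inclusive and right/bottom edges exclusive,
      # so a tap on a shared border belongs to exactly one region.
      if x >= x0 and x < x1 and y >= y0 and y < y1, do: url
    end)
  end
end

# Regions as decoded from the example payload (string keys, as a JSON decoder produces).
regions = [
  %{"begin" => [0, 500], "end" => [200, 600], "url" => "/bus"},
  %{"begin" => [200, 500], "end" => [400, 600], "url" => "/news"},
  %{"begin" => [400, 500], "end" => [600, 600], "url" => "/todo"}
]

StationServer.TouchRegions.url_at(regions, {250, 550})
# => "/news"
```

A tap outside every region, such as {100, 100}, returns nil, which a client can simply ignore.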

We'll start with the weather module. Let's configure the router first; we'll put JSON controllers in StationServerWeb.Pages and SVG-to-PNG controllers in StationServerWeb.Images.

```elixir
defmodule StationServerWeb.Router do
  use StationServerWeb, :router

  pipeline :api do
    plug :accepts, ["json"]
  end

  pipeline :image do
    plug :accepts, ["png", "image/*"]
  end

  # JSON page descriptions
  scope "/", StationServerWeb.Pages do
    pipe_through :api

    get "/weather", WeatherController, :index
  end

  # PNG images rendered from SVG templates
  scope "/images", StationServerWeb.Images do
    pipe_through :image

    get "/weather.png", WeatherController, :show
  end
end
```

The controllers are going to be slim and boring, each defining a single action: page controllers render :index and image controllers render :show. We'll configure the template structure just like in typical Phoenix usage, except that instead of .html.heex templates, the image modules will take .svg.eex templates.

Corresponding to the StationServerWeb.Pages.WeatherController module, let's create a JSON 'view' module like this:

```elixir
defmodule StationServerWeb.Pages.WeatherJSON do
  # Renders a JSON payload describing the page.
  # The touch regions are defined to demonstrate the API.
  def index(_assigns \\ %{}) do
    %{
      picture: "/images/weather.png",
      touch: [
        %{begin: [0, 0], end: [300, 100], url: "/departures"},
        %{begin: [300, 0], end: [600, 100], url: "/news"}
      ],
      # Reload interval in milliseconds (15 minutes)
      refresh_every: 15 * 60 * 1000
    }
  end
end
```

This was easy and as plain-Phoenix as it gets. Now we approach the image controllers: how do we wire them up to take SVG instead of HTML templates, and additionally convert the output to PNG for the HTTP responses? Here's what we'll do:

1. Create a custom wrapper around the embed_templates macro that overrides the template functions it generates from .svg.eex templates (such as show.svg.eex), appending an SVG-to-PNG conversion step:

```elixir
defmodule StationServerWeb.EmbedTemplates do
  # Embeds the templates matching `str` and wraps each of the listed
  # template functions so that its SVG output is converted to PNG.
  defmacro embed_svg2png_templates(str, templates) do
    # Allow the functions generated by embed_templates to be redefined...
    defoverridables =
      Enum.map(templates, fn template ->
        quote do
          defoverridable [{unquote(template), 1}]
        end
      end)

    # ...and redefine them to pipe the rendered SVG through convert/1.
    functions =
      Enum.map(templates, fn template ->
        quote do
          def unquote(template)(assigns) do
            unquote(__MODULE__).convert(super(assigns))
          end
        end
      end)

    quote do
      embed_templates unquote(str)
      unquote(defoverridables)
      unquote(functions)
    end
  end

  def convert(rendered_svg) do
    case Resvg.svg_string_to_png_buffer(rendered_svg, resources_dir: "/tmp") do
      {:ok, png_data} ->
        png_data

      error ->
        # Fail loudly instead of returning the error tuple as the response body.
        raise "SVG to PNG conversion failed: #{inspect(error)}"
    end
  end
end
```

2. Import the wrapper macro in the web module, for easier access via use StationServerWeb, :svg2png:

```elixir
# In lib/station_server_web.ex
def svg2png do
  quote do
    use Phoenix.Component
    import StationServerWeb.EmbedTemplates
  end
end
```

3. Create PNG modules using the wrapper:

```elixir
defmodule StationServerWeb.Images.WeatherPNG do
  use StationServerWeb, :svg2png

  # show.svg.eex in templates/weather/ becomes show/1, returning PNG bytes.
  embed_svg2png_templates("templates/weather/*", [:show])
end
```

That's it. Now you can just put .svg.eex templates in the folder selected in the PNG module. I won't walk through the template here; have a look at the weather template in our GitHub repository. It's a file I created in a vector editor and then edited by hand to some extent, mainly finessing the layout and sizing, and using a navigation panel helper that automatically wires up buttons linking to other pages.
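The repository's navigation helper isn't reproduced here, but the same idea can be applied on the JSON side, so that touch regions don't have to be hardcoded in every view. Here's a hypothetical sketch that splits a horizontal navigation bar into equally wide regions; the module name and the defaults (a 600 px wide screen with a bar spanning y = 500..600, as in the example payload earlier) are assumptions.

```elixir
defmodule StationServerWeb.NavPanel do
  @moduledoc """
  Hypothetical helper: splits a horizontal navigation bar into equally wide
  touch regions, one per URL, ready to be put into a page's JSON payload.
  """

  @doc "Builds touch regions for `urls`, ordered left to right."
  def regions(urls, opts \\ [])

  def regions([], _opts), do: []

  def regions(urls, opts) do
    width = Keyword.get(opts, :width, 600)
    top = Keyword.get(opts, :top, 500)
    bottom = Keyword.get(opts, :bottom, 600)
    count = length(urls)
    step = div(width, count)

    urls
    |> Enum.with_index()
    |> Enum.map(fn {url, i} ->
      # The last button absorbs any remainder left by the integer division.
      right = if i == count - 1, do: width, else: (i + 1) * step
      %{begin: [i * step, top], end: [right, bottom], url: url}
    end)
  end
end
```

Calling regions(["/bus", "/news", "/todo"]) produces the same three regions as the touch array in the example payload, so a JSON view could use touch: StationServerWeb.NavPanel.regions(...) and keep the coordinates in one place, next to the SVG layout they must match.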

You may notice that we're using the SVG image element to embed remotely loaded images, keeping the rendered templates self-contained. Whatever image data you fetch from elsewhere, you can base64-encode it and put it in a data URL in the xlink:href attribute. Also note that CSS filters are the easiest way to process images in our templates, to a certain extent; here, we're inverting the colours of the weather icons for legibility. Using a library such as vix or the good old ImageMagick would require us to customize the Nerves system we'll be using (and even then it can get a bit problematic), so let's not complicate things too much.

```xml
<image
  xlink:href="data:image/png;base64,<%= Base.encode64(...) %>"
  style="filter: invert(1)"
/>
```

I opted to use the OpenWeather API to retrieve current weather data for my location, and split it into sections for current weather and hourly and daily forecasts, plus a module for loading OpenWeather's icons based on the identifiers in the forecast. The API is a good choice for a home project like this because its free tier is quite generous for testing purposes.
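For illustration, here's a hypothetical sketch of the two small pieces such an icon module needs: building the icon URL from an identifier, using OpenWeather's documented https://openweathermap.org/img/wn/<icon>@2x.png pattern, and wrapping the downloaded bytes in a data URL for the SVG image element. The module name is made up, and the download itself is left to whatever HTTP client the app uses.

```elixir
defmodule StationServer.Weather.Icons do
  @moduledoc """
  Hypothetical icon helper. The actual HTTP fetch of the PNG bytes
  is intentionally left out.
  """

  @base "https://openweathermap.org/img/wn/"

  @doc "Builds the URL of an OpenWeather icon, given its code (e.g. 10d)."
  def url(code) when is_binary(code), do: @base <> code <> "@2x.png"

  @doc "Wraps fetched PNG bytes in a base64 data URL for an SVG image element."
  def data_url(png_bytes) when is_binary(png_bytes) do
    "data:image/png;base64," <> Base.encode64(png_bytes)
  end
end
```

In a template, the result of data_url/1 can go straight into the xlink:href attribute, which keeps the rendered SVG self-contained before it's passed to resvg.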

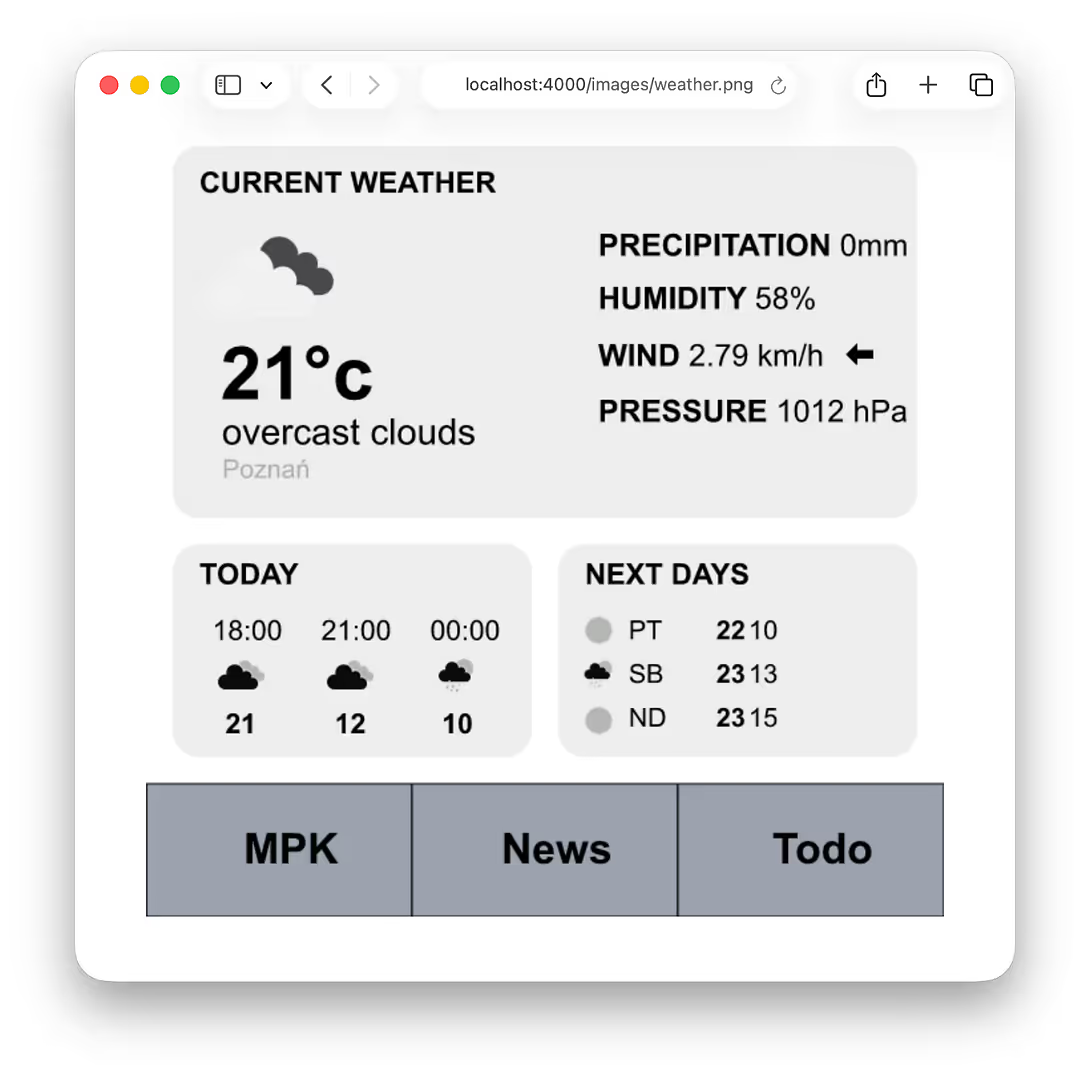

Once we've got the Phoenix basics in place, we can load the page. Here's what the code currently in our GitHub repository serves at /images/weather.png when you load it in the browser:

And here's how it looks on the Inkplate, after configuring it to send HTTP requests to our development server, without and with the frontlight (photos taken with the Polish locale):

In terms of legibility, your mileage may vary. You might want to adjust the sizes of elements in your SVG template, the fonts, the intensity of the Inkplate's frontlight, etc. What's important is that it works and allows navigating to other pages of the application via touch regions defined in the JSON payload, corresponding to what's rendered in the SVG template.

Now that we've got the thing up and running on our development machine, and we've connected the Inkplate to load pages from it, let's see what it'll take to deploy to a Raspberry Pi.

Configuring Raspberry Pi and Nerves

As stated before, using bare-metal BEAM with Nerves on the Pi is part of the whole exercise, and we'll get some benefits from that, too:

The Pi Zero isn't very powerful in terms of either CPU or RAM, so it's good to have a healthy margin here. The BEAM should do a good enough job managing memory, but keep in mind that resvg is used via a Rust NIF: while Rust is known for its memory safety, that doesn't rule out excessive memory use, and the NIF's memory is outside the BEAM's control. Monitoring RAM consumption is therefore recommended.
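Monitoring can start with something as simple as the sketch below. :erlang.memory/0 reports what the BEAM's own allocators hold, which may not include memory a NIF obtains through its own allocator, so it helps to also look at system-wide figures from /proc/meminfo (Linux only). The module name and its functions are hypothetical.

```elixir
defmodule StationServer.MemoryCheck do
  @moduledoc """
  Hypothetical helper for watching memory on the Pi. /proc/meminfo is
  system-wide, so it also reflects allocations made outside the BEAM.
  """

  @doc "Parses /proc/meminfo-style text into a map of field name => kB."
  def parse(text) when is_binary(text) do
    text
    |> String.split("\n", trim: true)
    |> Enum.reduce(%{}, fn line, acc ->
      with [key, value] <- String.split(line, ":", parts: 2),
           {number, _unit} <- Integer.parse(String.trim(value)) do
        Map.put(acc, key, number)
      else
        # Skip lines that don't look like "Field:   123 kB".
        _ -> acc
      end
    end)
  end

  @doc "Returns MemAvailable in kB, or nil if it can't be read."
  def available_kb(path \\ "/proc/meminfo") do
    case File.read(path) do
      {:ok, text} -> text |> parse() |> Map.get("MemAvailable")
      {:error, _reason} -> nil
    end
  end

  @doc "Puts BEAM-reported memory next to system-wide available memory."
  def snapshot do
    %{beam_total_bytes: :erlang.memory(:total), system_available_kb: available_kb()}
  end
end
```

Logging snapshot/0 periodically (for example, from a process using Process.send_after/3) would show whether available memory shrinks while the BEAM's own figure stays flat, which would point at memory held outside the BEAM's allocators.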

Before we proceed, let's recap some of the most important facts about Nerves, its nature and purpose, in the context of our project. We won't be copy-pasting from the docs, which explain the common terms (host, target, system, etc.):

Normally, we would use the mix nerves.new generator from the nerves_bootstrap package to bootstrap the skeleton of a Nerves project, but since it was more convenient to start with Phoenix this time, we'll add Nerves afterwards.

We need to configure a target other than the default MIX_TARGET=host (I named it :rpi), with several convenience settings in place that are typical for Nerves projects:

You can find the packages that we need to add for our target here, and the whole target configuration file, which needs to be loaded only for non-host targets, here.
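As a rough idea of what loading the target configuration conditionally looks like, here's a sketch based on the conventions of Nerves project templates; it's not a copy of the repository's files. The endpoint and mDNS settings are assumptions chosen to match the station_server.local:4000 URL used later in this article.

```elixir
# config/config.exs (excerpt)
import Config

# ... regular Phoenix configuration ...
import_config "#{config_env()}.exs"

# Nerves-specific settings are only needed when building firmware,
# so `mix phx.server` on the development machine keeps working as before.
if Mix.target() != :host do
  import_config "target.exs"
end

# config/target.exs (excerpt)
import Config

# Start nerves_runtime and nerves_pack before the application itself.
config :shoehorn, init: [:nerves_runtime, :nerves_pack]

# Listen on all interfaces, port 4000, and actually start the endpoint.
config :station_server, StationServerWeb.Endpoint,
  http: [ip: {0, 0, 0, 0}, port: 4000],
  server: true

# Advertise the device over mDNS as station_server.local (assumed host name).
config :mdns_lite, hosts: [:hostname, "station_server"]
```

Importing target.exs after the environment-specific file means its endpoint settings override the development defaults, such as binding only to 127.0.0.1.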

Overall, a few other steps are needed to add Nerves to the project:

Let's get past this step and get down to business.

Now, after fetching the deps, we compile the firmware bundle and, the first time, burn it onto an SD card; the tool will autodetect your card reader and help you select the right device. Once the Pi is up and running in our local network, you can just upload new firmware over the air.

```shell
export MIX_TARGET=rpi
mix firmware
mix burn # first burn to SD card
mix upload station_server.local # subsequent deployments
```

When you deploy the firmware to your Pi and load http://station_server.local:4000/images/weather.png, you may notice blank spaces where the text captions should be. If you recall that a Nerves system contains little more than the Linux kernel and the BEAM, you might guess why: by default, there are no fonts to speak of.

This is where the rootfs_overlay comes in handy: just put your fonts in rootfs_overlay/usr/share/fonts/truetype and, if you need to handle generic family names such as sans-serif in your layout styles, create an etc/fonts/conf.d/10-generic.conf file (also within rootfs_overlay) that assigns your fonts to these categories.

Next steps

Now that we have the backend code in place, we have plenty of further development opportunities for the system. These ideas include:

All of this has to be done while keeping energy consumption as low as possible.

The first one, retrieving public transit data (stop times, real-time delay data, etc.), will be covered in our next article: we'll explore the options available out there, such as GTFS as well as aggregation APIs, pick the right ones for our use case and hardware, and demonstrate the implementation.

In fact, the transit data implementation is already in our GitHub repository, if you'd like a spoiler. In the next article, though, we'll explain how we arrived at this specific solution. Thank you for reading, and keep an eye on our blog for updates!
