What is language modeling all about?

Discover the secrets of classical language modeling and learn how GPT-2 predicts text, handles tokenization, and adjusts creativity with temperature.

In recent years, large language models have gained enormous popularity, and now they can be found almost everywhere. Tools like ChatGPT, Google Gemini, and Claude are very useful, so more and more people are starting to use them. That's why it's important to have a basic understanding of how they work under the hood.

In my previous article, I walked through the process of fine-tuning a large language model for extractive question answering. That task, however, did not involve classical language modeling, which is the foundation of the whole natural language processing field. This time, I would like to explain how all those wonderful tools work, using the GPT-2 model as an example.

What is classical language modeling?

Classical language modeling is a core concept of natural language processing, where the primary goal is to predict the next part of a given sequence by generating a probability distribution over all possible characters or words. Historically, there have been many approaches to this problem. One of the earliest was n-gram models, which build a statistical model by counting how often every combination of n consecutive words (or characters) occurs in a large text corpus. Later, as deep learning gained popularity, researchers turned to neural networks, especially recurrent ones such as GRU or LSTM. Then, in 2017, the transformer architecture appeared, quickly came to dominate the field of natural language processing, and became the state-of-the-art approach for language-related tasks.
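The counting idea behind n-gram models fits in a few lines. Below is a minimal bigram (n = 2) sketch in plain Elixir, with no libraries needed. The `Bigram` module and its functions are hypothetical names for illustration, and a practical n-gram model would also need smoothing so that word pairs never seen in the corpus don't end up with zero probability.

```elixir
defmodule Bigram do
  # Hypothetical toy model: counts how often each word is followed by another.
  # Returns a map of [word, next_word] => count.
  def train(corpus) do
    corpus
    |> String.downcase()
    |> String.split()
    |> Enum.chunk_every(2, 1, :discard)
    |> Enum.frequencies()
  end

  # Normalizes the counts for `word` into a probability distribution
  # over the words that followed it in the corpus.
  def next_word_distribution(counts, word) do
    followers = for {[^word, next], n} <- counts, do: {next, n}
    total = followers |> Enum.map(fn {_next, n} -> n end) |> Enum.sum()
    Map.new(followers, fn {next, n} -> {next, n / total} end)
  end
end

counts = Bigram.train("the cat sat on the mat")
IO.inspect(Bigram.next_word_distribution(counts, "the"))
```

In this toy corpus, "the" is followed once by "cat" and once by "mat", so each gets a probability of 0.5.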

GPT overview

GPT-2 belongs to the family of generative pre-trained transformer (GPT) models developed by OpenAI. It is a transformer model that leverages the self-attention mechanism for better natural language understanding. It was introduced in 2019 and might seem a bit outdated in the rapidly evolving world of artificial intelligence, especially when compared to recent, much larger models like GPT-4 or GPT-4o. However, all models from the GPT family use only the decoder part of the transformer architecture, which lets them predict the next word in a sequence, so the general mechanism of text generation in these models remains the same.
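Decoder-only models such as GPT-2 use causal (masked) self-attention: each position may attend only to itself and to the positions before it. This is what allows the model to learn to predict the next token at every position of a sequence at once. As a rough illustration, here is a sketch in plain Elixir that builds such a mask as nested lists. The `CausalMask` module is hypothetical; inside real models the mask is applied to attention scores as tensors, typically by setting masked scores to a very large negative value before softmax.

```elixir
defmodule CausalMask do
  @doc """
  Hypothetical helper: builds an n x n causal mask as nested lists.
  Row i marks the positions token i may attend to: 1 for itself and
  earlier tokens, 0 for later (future) tokens.
  """
  def build(n) when is_integer(n) and n >= 0 do
    # The `//1` step keeps the range empty when n == 0 instead of counting down.
    for i <- 0..(n - 1)//1 do
      for j <- 0..(n - 1)//1, do: if(j <= i, do: 1, else: 0)
    end
  end
end

CausalMask.build(4)
|> Enum.each(&IO.inspect/1)
```

Each row corresponds to one input position, so a 7-token prompt gives 7 rows, and the model can produce a prediction for each of them.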

As you might already know, the newest models are proprietary and are not available on Hugging Face or in the Bumblebee library. That's why I'll use GPT-2.

Installation

If you want to follow along with this article, I highly recommend using Livebook, since it's a very convenient way to work with code, but a regular Mix project will be sufficient too.

The only required libraries are Axon, Bumblebee, and Nx. Axon provides an abstraction for implementing neural networks, Bumblebee makes it possible to interact with the models available on the Hugging Face Hub, and Nx is responsible for all the math operations that take place under the hood. Optionally, if you want to accelerate model inference, you might find EXLA helpful.
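In Livebook (or a standalone `.exs` script), the dependencies can be installed with `Mix.install/2`. The snippet below is a sketch: the version requirements are assumptions, so check Hex for current, mutually compatible releases. The `config` option makes EXLA the default Nx backend, which is optional and matches the `EXLA.Backend` tensors shown in the outputs in this article.

```elixir
# Version requirements are assumptions; adjust them to current releases on Hex.
Mix.install(
  [
    {:bumblebee, "~> 0.5"},
    # Axon and Nx are also pulled in by Bumblebee; listing them makes the
    # dependency explicit, and ">= 0.0.0" lets Mix pick versions Bumblebee accepts.
    {:axon, ">= 0.0.0"},
    {:nx, ">= 0.0.0"},
    # Optional: XLA-based backend and compiler for faster inference
    {:exla, ">= 0.0.0"}
  ],
  # Optional: make EXLA the default backend for all Nx tensors
  config: [nx: [default_backend: EXLA.Backend]]
)
```

In a regular Mix project, the same dependency tuples go into the `deps` list in `mix.exs` instead.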

Model download

First things first: the model and tokenizer need to be downloaded to your local drive. To do that, you have to specify which checkpoint the model and tokenizer should be loaded from.

```elixir
checkpoint = {:hf, "gpt2"}

{:ok, %{model: model, params: params}} = Bumblebee.load_model(checkpoint)
{:ok, tokenizer} = Bumblebee.load_tokenizer(checkpoint)
```

The download size depends on the chosen variant, but it usually takes a while to finish.

If you want to find out how big the model is, you can count how many parameters it has.
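A small recursive function can add up the number of entries in every parameter tensor. The sketch below assumes `params` is a nested map whose leaves are Nx tensors, which is how Bumblebee versions built on Axon 0.6 return them. Newer Axon versions wrap parameters in an `Axon.ModelState` struct; in that case, passing its `data` field should work instead, but treat that as an assumption to check against your installed version. `ParamCount` is a hypothetical helper, not part of Bumblebee.

```elixir
defmodule ParamCount do
  @moduledoc """
  Hypothetical helper: counts the scalar entries in a nested map of tensors.
  """

  @doc """
  Walks plain (non-struct) maps recursively and measures every other value,
  such as an %Nx.Tensor{} struct, with `size_fun` (Nx.size/1 by default).
  """
  def count(params, size_fun \\ &Nx.size/1)

  def count(value, size_fun) when is_map(value) and not is_struct(value) do
    value
    |> Map.values()
    |> Enum.map(&count(&1, size_fun))
    |> Enum.sum()
  end

  def count(leaf, size_fun), do: size_fun.(leaf)
end
```

With the GPT-2 parameters loaded as above, `ParamCount.count(params)` returns the total number of parameters (or `ParamCount.count(params.data)` on newer Axon versions, under the assumption above).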

Text tokenization

OK, now it's time to prepare the input text that you want to complete. I'll start with a simple unfinished sentence.

```elixir
text = "My name is John and my main"
```

Since computers and neural networks don't understand plain text, we need to convert it to numbers. Each model has an associated vocabulary, built from the most common words and word fragments of the text corpus it was trained on. Because the number of possible words is practically unlimited, the vocabulary is usually limited to tens of thousands of entries. In the case of GPT-2, there are 50257 tokens in the vocabulary. Some tokenizers replace a word from outside the vocabulary with a special token for unknown words, but GPT-2's tokenizer uses byte-level byte-pair encoding (BPE), so an unfamiliar word is instead split into smaller pieces that are in the vocabulary, down to single bytes if necessary.
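To get a feel for how byte-pair encoding splits an unfamiliar word instead of discarding it, here is a toy sketch in plain Elixir. It is a simplification, not GPT-2's actual tokenizer: it works on characters instead of bytes, skips GPT-2's pre-tokenization and space handling, and uses a hypothetical, hand-written list of merge rules ordered by priority. At each step, the highest-priority adjacent pair that has a rule is merged, until no rule applies.

```elixir
defmodule ToyBPE do
  # Hypothetical toy encoder: `merges` is a list of {left, right} pairs,
  # earlier entries have higher priority (lower rank).
  def encode(word, merges) do
    ranks = merges |> Enum.with_index() |> Map.new()
    word |> String.graphemes() |> merge_loop(ranks)
  end

  defp merge_loop(symbols, ranks) do
    # All adjacent pairs that have a merge rule
    candidates =
      symbols
      |> Enum.chunk_every(2, 1, :discard)
      |> Enum.map(&List.to_tuple/1)
      |> Enum.filter(&Map.has_key?(ranks, &1))

    case candidates do
      [] ->
        symbols

      _ ->
        best = Enum.min_by(candidates, &Map.fetch!(ranks, &1))
        symbols |> merge_pair(best) |> merge_loop(ranks)
    end
  end

  # Replaces every occurrence of the pair {a, b} with the merged symbol a <> b.
  defp merge_pair([a, b | rest], {a, b}), do: [a <> b | merge_pair(rest, {a, b})]
  defp merge_pair([x | rest], pair), do: [x | merge_pair(rest, pair)]
  defp merge_pair([], _pair), do: []
end

merges = [{"l", "o"}, {"lo", "w"}, {"e", "r"}]
IO.inspect(ToyBPE.encode("lower", merges))
IO.inspect(ToyBPE.encode("lowest", merges))
```

With these merges, "lower" becomes `["low", "er"]`, while "lowest" is still encoded as `["low", "e", "s", "t"]` rather than being replaced by an unknown token.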

```elixir
input = Bumblebee.apply_tokenizer(tokenizer, text)
```

As a result, we get a map with several keys, but the most important one is called "input_ids". Let's see what's inside.

```
%{
  "attention_mask" => #Nx.Tensor<
    u32[1][7]
    EXLA.Backend<host:0, 0.502782931.1833566222.97129>
    [
      [1, 1, 1, 1, 1, 1, 1]
    ]
  >,
  "input_ids" => #Nx.Tensor<
    u32[1][7]
    EXLA.Backend<host:0, 0.502782931.1833566222.97128>
    [
      [3666, 1438, 318, 1757, 290, 616, 1388]
    ]
  >,
  "token_type_ids" => #Nx.Tensor<
    u32[1][7]
    EXLA.Backend<host:0, 0.502782931.1833566222.97130>
    [
      [0, 0, 0, 0, 0, 0, 0]
    ]
  >
}
```

To verify that this is exactly what we provided above, we can decode the token IDs back into text.

```elixir
Bumblebee.Tokenizer.decode(tokenizer, [3666, 1438, 318, 1757, 290, 616, 1388])
```

```
"My name is John and my main"
```

Sometimes, while playing with tokenizers, you may come across weird characters like Ġ. Don't worry: this happens because the tokenizer operates on the byte level (GPT-2's tokenizer displays a leading space as Ġ) and might cut words in unexpected places. As long as the decoded text looks correct, you should be good to go.

Model inference

With the tokenized text, we are ready to try GPT-2. To do so, simply pass the model, the downloaded parameters, and the prepared input.

```elixir
output = Axon.predict(model, params, input)
```

After a second, you should get a response from the model, but it will look different from the responses you know from, for example, ChatGPT. Let's take a closer look at it.

```
%{
  cache: #Axon.None<...>,
  logits: #Nx.Tensor<
    f32[1][7][50257]
    EXLA.Backend<host:0, 0.502782931.1833566222.99355>
    [
      [
        [-33.07360076904297, -32.33494186401367, -35.238014221191406, -34.77516555786133, -33.86668014526367, -34.452186584472656, -33.024147033691406, -33.58883285522461, -32.04574203491211, -34.41610336303711, -34.191070556640625, -30.15697479248047, -30.67748260498047, -30.273983001708984, -31.89931297302246, -34.27805709838867, -33.321781158447266, -33.531585693359375, -34.08525466918945, -34.172367095947266, -34.3142204284668, -34.81998062133789, -34.67438888549805, -34.668212890625, -34.99346160888672, -31.478322982788086, -33.271759033203125, -34.910186767578125, -33.903289794921875, -34.02199172973633, -32.871337890625, -34.703975677490234, -32.76276779174805, -33.283931732177734, -33.50212097167969, -33.37453079223633, -33.678306579589844, -33.35165023803711, -33.23114013671875, -33.39592742919922, -32.423004150390625, -33.56220626831055, -33.300479888916016, -33.525787353515625, -33.25764465332031, -33.98344802856445, -33.430992126464844, -33.69162368774414, ...],
        ...
      ]
    ]
  >,
  cross_attentions: #Axon.None<...>,
  hidden_states: #Axon.None<...>,
  attentions: #Axon.None<...>
}
```

Similarly to the tokenizer, the model also returned a map with a few keys. The one we are interested in is called "logits". If we look at its shape, we will see some familiar values.

```elixir
%{logits: logits} = output

Nx.shape(logits)
```

```
{1, 7, 50257}
```

We passed 1 input with 7 tokens, and the vocabulary size is 50257. So what does each of these values mean? Is it a probability? Well, a probability distribution must sum up to one, and every probability must be in the range [0, 1]. If you look closely, you will find values far outside that range, such as -33.07, so no, this is not a valid probability distribution yet, but it will be in a second.

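Logits are the raw, unnormalized outputs of the last layer, but they can be converted into a probability distribution by applying the softmax function. It is implemented in the Axon.Activations module (applied along the last axis by default, so each of the seven positions gets its own distribution over the vocabulary), and to use it, we can simply invoke: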

```elixir
probabilities = Axon.Activations.softmax(logits)
```

```
#Nx.Tensor<
  f32[1][7][50257]
  EXLA.Backend<host:0, 0.502782931.1833566222.99361>
  [
    [
      [6.76328840199858e-4, 0.0014156418619677424, 7.765422924421728e-5, 1.233609509654343e-4, 3.060045710299164e-4, 1.7039061640389264e-4, 7.10616703145206e-4, 4.040131170768291e-4, 0.0018903894815593958, 1.7665114137344062e-4, 2.2123150120023638e-4, 0.012497768737375736, 0.007426408119499683, 0.011117738671600819, 0.0021884902380406857, 2.0280058379285038e-4, 5.27684751432389e-4, 4.2781655793078244e-4, 2.4592471891082823e-4, 2.2540823556482792e-4, 1.9559766224119812e-4, 1.1795457976404577e-4, 1.3644086720887572e-4, 1.3728612975683063e-4, 9.916831913869828e-5, 0.003334097098559141, 5.547520122490823e-4, 1.077801498468034e-4, 2.950044290628284e-4, 2.6198531850241125e-4, 8.27941345050931e-4, 1.3246315938886255e-4, 9.228921262547374e-4, 5.480401450768113e-4, 4.406095831654966e-4, 5.005709826946259e-4, 3.694345650728792e-4, 5.121563444845378e-4, 5.777493352070451e-4, 4.899742198176682e-4, 0.0012963087065145373, 4.149150918237865e-4, 5.390456644818187e-4, 4.3030441156588495e-4, 5.626375204883516e-4, 2.722803328651935e-4, 4.730911459773779e-4, 3.645473625510931e-4, 1.2901266745757312e-4, 3.226939879823476e-4, ...],
      ...
    ]
  ]
>
```

Let's check what happens when softmax is applied.

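If we take a look at its formula, we will notice that Euler's number is raised to the power of each logit, which solves the problem of negative numbers, since the result is always positive. Then, each exponentiated value is divided by the sum of all of them, which ensures that every value lies between 0 and 1 and that they sum up to exactly 1:

$$\mathrm{softmax}(z)_i = \frac{e^{z_i}}{\sum_{j=1}^{V} e^{z_j}}$$

where $z$ is the vector of logits for one position and $V = 50257$ is the vocabulary size. This transformation allows us to interpret the resulting values as probabilities, representing the model's confidence in every token.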
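The same computation can be written in a few lines of plain Elixir for a single list of logits. This is a sketch for illustration, not Axon's implementation, and the `Softmax` module name is hypothetical. Subtracting the largest logit first is a standard trick: it cancels out in the fraction, so the result is unchanged, but it keeps `:math.exp/1` from overflowing on large logits.

```elixir
defmodule Softmax do
  @doc "Hypothetical helper: turns a list of logits into positive probabilities that sum to 1."
  def softmax(logits) do
    # e^(z - m) / sum(e^(z_j - m)) equals e^z / sum(e^z_j), but with the
    # maximum m subtracted every exponent is <= 0, so :math.exp/1 cannot overflow.
    max = Enum.max(logits)
    exps = Enum.map(logits, fn z -> :math.exp(z - max) end)
    total = Enum.sum(exps)
    Enum.map(exps, fn e -> e / total end)
  end
end

# The first three logits from the output above
probs = Softmax.softmax([-33.07, -32.33, -35.24])
IO.inspect(probs)
IO.inspect(Enum.sum(probs))
```

The result consists of three positive numbers that add up to 1 (up to floating-point rounding), with the highest probability assigned to the largest logit. Because the full model output is normalized over all 50257 tokens, these values differ from the probabilities printed above.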

That means we now have access to seven probability distributions, one for each provided token, and each of them describes which token the model expects to come next after that position. We can inspect them and list the five tokens with the highest probability.

```elixir
# The five most probable next tokens (and their probabilities) for every position
{top_probabilities, top_tokens} = Nx.top_k(probabilities, k: 5)

input["input_ids"]
|> Nx.to_flat_list()
|> Enum.with_index()
|> Enum.each(fn {token, idx} ->
  position_probabilities = Nx.to_list(top_probabilities[0][idx])
  position_tokens = Nx.to_list(top_tokens[0][idx])

  # Print the input token at this position
  decoded_token = Bumblebee.Tokenizer.decode(tokenizer, [token])
  IO.puts("#{token}\t#{decoded_token}")

  # Print the model's top 5 candidates for the token that follows it
  [position_probabilities, position_tokens]
  |> Enum.zip()
  |> Enum.each(fn {probability, candidate} ->
    rounded_probability = Float.round(probability, 5)
    decoded_candidate = Bumblebee.Tokenizer.decode(tokenizer, [candidate])
    IO.puts("#{rounded_probability}\t#{candidate}\t#{decoded_candidate}")
  end)

  IO.puts("")
end)
```

```
3666 My
0.01317 198 \n
0.0125 11 ,
0.01112 13 .
0.00743 12 -
0.00675 262 the

1438 name
0.84368 318 is
0.05219 338 's
0.01769 11 ,
0.01551 373 was
0.00672 290 and

318 is
0.0106 1757 John
0.00843 3700 James
0.00795 3271 David
0.0072 3899 Michael
0.00584 509 K

1757 John
0.04787 13 .
0.04535 11 ,
0.02295 290 and
0.02268 31780 Doe
0.01133 327 C

290 and
0.75795 314 I
0.03328 616 my
0.02476 428 this
0.01809 356 we
0.01027 340 it

616 my
0.1461 1438 name
0.12624 1641 family
0.0542 2802 mother
0.05075 2988 father
0.04649 3656 wife

1388 main
0.12841 3061 goal
0.11022 1693 job
0.04686 2962 focus
0.04646 4007 purpose
0.04367 2328 concern
```

This way, you can see the model's predictions for every provided token, each based on the preceding sequence. Of course, the most interesting prediction is the last one, since that token is still unknown and will be appended to the existing text. For the sequence "My name is John and my main", the model predicted the following words: "goal", "job", "focus", "purpose", and "concern", and all of them seem reasonable. Great! All we have to do now is pick one token, append it to the text, and repeat the process. But how do we decide which token to choose? Usually, the next token is sampled according to the returned probability distribution, so the chance that the word "goal" will be chosen is about 12.8%, for "job" it's about 11%, and so on.
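Sampling from a distribution like this is straightforward: draw a uniform random number and walk through the cumulative probabilities until you pass it. The sketch below does that in plain Elixir and wraps it in the "pick, append, repeat" loop described above. `NextToken` is a hypothetical module, and `next_fun` is a hypothetical stand-in for the model: in the real setup, it would tokenize the text, run `Axon.predict`, and return the last position's tokens paired with their probabilities. If you pass only the top few candidates, the sampler renormalizes them, which turns plain sampling into top-k sampling, a common variant.

```elixir
defmodule NextToken do
  @doc """
  Hypothetical helper: samples a token from `{token, probability}` pairs.
  `u` is a uniform random number in [0, 1); probabilities are rescaled by
  their total, so a truncated list (such as only the top 5) is renormalized.
  """
  def pick(pairs, u \\ :rand.uniform()) do
    total = pairs |> Enum.map(fn {_token, p} -> p end) |> Enum.sum()
    target = u * total

    # Walk the cumulative sum until it exceeds the random target.
    result =
      Enum.reduce_while(pairs, 0.0, fn {token, p}, acc ->
        acc = acc + p
        if target < acc, do: {:halt, {:found, token}}, else: {:cont, acc}
      end)

    case result do
      {:found, token} -> token
      # Floating-point rounding can leave `target` marginally above the final sum.
      _sum -> pairs |> List.last() |> elem(0)
    end
  end

  @doc """
  Autoregressive loop: asks the hypothetical `next_fun` for the next-token
  distribution, samples one token, appends it, and repeats `steps` times.
  """
  def generate(tokens, _next_fun, 0), do: tokens

  def generate(tokens, next_fun, steps) when is_integer(steps) and steps > 0 do
    next_token = tokens |> next_fun.() |> pick()
    generate(tokens ++ [next_token], next_fun, steps - 1)
  end
end

candidates = [
  {"goal", 0.12841},
  {"job", 0.11022},
  {"focus", 0.04686},
  {"purpose", 0.04646},
  {"concern", 0.04367}
]

IO.puts(NextToken.pick(candidates))
```

With the five candidates printed above, `NextToken.pick/2` returns "goal" for `u = 0.0`, "job" for `u = 0.5`, and "concern" for `u = 0.99`.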

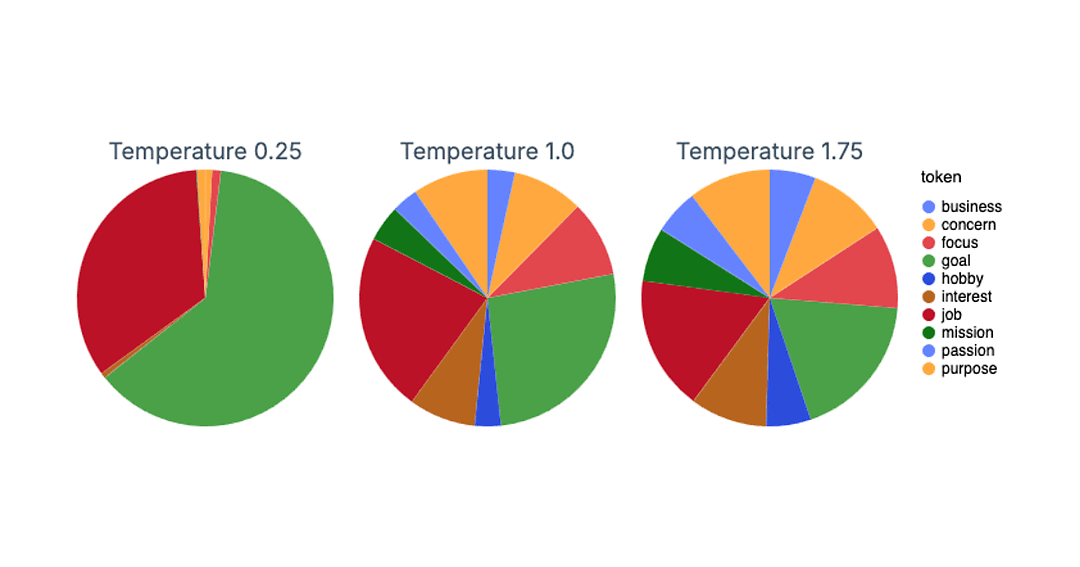

Temperature

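You might have heard about temperature in the context of large language models. But what is it, and how does it work? By manipulating the temperature, we can adjust the probability distribution and thus affect the behavior of the model. Before softmax is applied, every logit is divided by the temperature $T$, which must be positive:

$$p_i = \frac{e^{z_i / T}}{\sum_{j=1}^{V} e^{z_j / T}}$$

where $z_i$ is the logit of token $i$ and $V$ is the vocabulary size.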

The lower the temperature, the less random the model's responses become, and the higher it is, the more unpredictable and creative the model gets. As the temperature approaches 0, almost all of the probability goes to the single most likely token. To keep the distribution unchanged, the temperature must be set to 1.
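Temperature scaling is easy to try out on a handful of logits. Here is a minimal sketch in plain Elixir that divides every logit by the temperature `t` and then applies softmax; the `Temperature` module name is hypothetical, and the three logits are made-up toy values rather than GPT-2 output.

```elixir
defmodule Temperature do
  @doc "Hypothetical helper: softmax over logits divided by a positive temperature `t`."
  def softmax(logits, t) when is_number(t) and t > 0 do
    scaled = Enum.map(logits, fn z -> z / t end)
    # Subtract the maximum for numerical stability; it cancels out in the ratio.
    max = Enum.max(scaled)
    exps = Enum.map(scaled, fn z -> :math.exp(z - max) end)
    total = Enum.sum(exps)
    Enum.map(exps, fn e -> e / total end)
  end
end

# Toy logits for three candidate tokens (made-up values)
logits = [1.0, 2.0, 3.0]

for t <- [0.5, 1.0, 2.0] do
  probs = logits |> Temperature.softmax(t) |> Enum.map(&Float.round(&1, 3))
  IO.puts("T = #{t}: #{inspect(probs)}")
end
```

With `T = 0.5`, most of the probability goes to the token with the largest logit; with `T = 2.0`, the three probabilities move closer together, so sampling becomes more varied. With `T = 1.0`, the result is the ordinary softmax.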

Summary

And that's it! It's important to have a basic knowledge of how the increasingly popular large language models work. I hope this brief introduction helped you understand the subject and got you interested in it.
