Introduction to the FLAME library

Scaling applications can be challenging, especially when only specific parts of your app are compute-intensive.

Imagine a scenario where your application handles image uploads, and for each upload, you need to resize the image to multiple resolutions. This process can be CPU-intensive, especially with large or frequent uploads.

The challenge is: how do you scale this task effectively without over-provisioning data processing resources for your entire application?

Some common approaches to solving this include:

- Scaling the entire application horizontally.

- Offloading the task to serverless functions like AWS Lambda.

- Using a modular scaling solution such as FLAME for targeted resource optimization.

In the next section, we’ll briefly compare these methods to provide context for why FLAME is a compelling choice, particularly for those needing modular scaling.

Approaches to Scaling Compute-Intensive Functions

When your application has compute-intensive tasks, scaling them effectively can make a huge difference in performance and cost. There are several strategies you can adopt. Let’s explore three common approaches:

1. Horizontal Scaling

Overview:

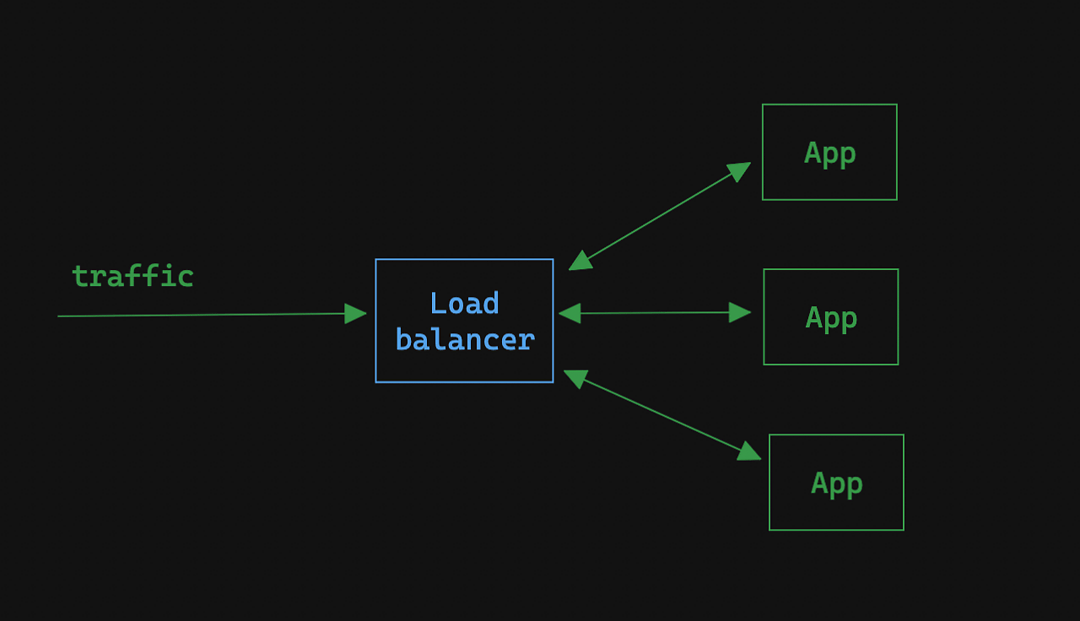

Horizontal scaling involves adding more instances of your application to handle increased load. This approach treats your application as a whole, scaling all components regardless of their individual resource needs. To ensure proper traffic distribution, a load balancer is typically required in front of the application instances.

How it works:

- Your application is duplicated across multiple servers or containers.

- A load balancer distributes incoming requests across these instances.

Pros:

- Simple to implement for applications designed for horizontal scaling.

- Effective for handling high overall traffic.

Cons:

- It is inefficient for applications with specific, compute-heavy tasks, as all parts of the app are scaled equally.

- This can result in higher costs and resource usage since non-compute-intensive parts also scale unnecessarily.


2. Serverless Functions

Serverless functions allow you to run code in response to events, without managing underlying infrastructure. A function, such as image resizing, is triggered and executed in a stateless, ephemeral environment.

How it works:

- An event, like an image upload, triggers a function.

- The function is executed in a serverless environment.

- Results are returned to the application.

Pros:

- Scales automatically with demand.

- You don't manage the underlying infrastructure.

Cons:

- Execution time limits: Tasks exceeding the provider’s maximum execution time (e.g., 15 minutes for an AWS Lambda function) are terminated.

- You will often need to rewrite parts of your app so they can run on an external service.


3. Modular Scaling with FLAME

Overview:

FLAME enables modular scaling, allowing you to offload specific individual functions to ephemeral nodes. Unlike traditional horizontal scaling, FLAME does not require a load balancer. Instead, it dynamically provisions short-lived nodes to handle compute-heavy functions.

How it works:

- Code is wrapped in a FLAME.call, which dispatches the work to a remote worker node.

- The worker node performs the computation and sends the result back.

- After completion, the node is terminated.

Pros:

- Highly efficient: Only scale the specific tasks that need additional resources.

- Integrated with your application: No need to adopt external services or rewrite code to fit them.

Cons:

- Requires setup and configuration, such as a backend for provisioning nodes.

- Adds some management overhead compared to serverless functions.


While horizontal scaling is straightforward, it can be resource-intensive and costly when only certain functions require scaling. Serverless functions eliminate the need to manage infrastructure, but they often require rewriting parts of your application. FLAME offers a balanced solution: it targets only the tasks that need extra resources, without scaling the whole application or moving code to an external service.

This brings us to the question: what exactly is FLAME, and how does it solve these challenges for Elixir developers?

What is FLAME?

FLAME stands for Fleeting Lambda Application for Modular Execution. It is a software development pattern in which you treat your entire application as a lambda, where modular parts can be executed on short-lived infrastructure.

How does it work?

Using FLAME is straightforward: wrap your function code in a FLAME.call, and the framework takes care of executing it on a remote, ephemeral node. Once the execution is complete, the result is sent back to the parent node, and the remote node is terminated.

Here’s a quick example for resizing an image:

    def resize_image(%Image{} = image) do
      FLAME.call(MyApp.ImageRunner, fn ->
        # logic for resizing the image
      end)
    end

This approach allows you to scale specific parts of your application dynamically as demand increases, ensuring you only pay for the resources required during execution. It’s a flexible and cost-effective way to handle computationally intensive tasks.
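As a slightly fuller sketch of the same idea, the module below resizes one upload to several resolutions inside a single FLAME.call. The module name MyApp.Thumbnails, the list of sizes, and the resize/3 helper are hypothetical stand-ins for real image-processing code. Only FLAME.call and its :timeout option come from the library, and the snippet assumes the :flame dependency is installed and a pool named MyApp.ImageRunner is running.

```elixir
defmodule MyApp.Thumbnails do
  # Hypothetical target resolutions, for illustration only.
  @sizes [{1920, 1080}, {1280, 720}, {640, 360}]

  # Runs all resizes for one upload on a runner from the MyApp.ImageRunner pool.
  # The anonymous function and the variables it captures (here, `path`) are
  # sent to the remote node; its return value comes back to the caller.
  def resize_all(path) when is_binary(path) do
    FLAME.call(
      MyApp.ImageRunner,
      fn -> Enum.map(@sizes, fn {width, height} -> resize(path, width, height) end) end,
      timeout: 60_000
    )
  end

  # Placeholder for real resizing logic: it only computes an output file name.
  defp resize(path, width, height) do
    "#{Path.rootname(path)}_#{width}x#{height}#{Path.extname(path)}"
  end
end
```

Note that a path on the parent's local disk is not visible on the remote machine, so a real version would pass the image data itself or a reference to shared storage.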

To enable this, you first need to add a FLAME.Pool to your application’s supervision tree.

Pool of runners

The FLAME.Pool module is responsible for managing a pool of FLAME.Runner processes. It dynamically adjusts the number of active runners based on the workload, which keeps resource utilization efficient as demand changes.

Key features:

Automatic scaling:

- The pool can scale up and down based on the specified :min and :max values.

- These settings allow the system to maintain a balance between resource availability and cost. Setting :min ensures a baseline number of runners are always available, minimizing cold starts at the cost of potentially idle resources. Setting :max imposes an upper limit, preventing excessive resource usage during peak loads.

Concurrency Control:

- The :max_concurrency option specifies the maximum number of functions that can run concurrently on each node, ensuring that tasks do not overwhelm individual servers and degrade performance.

Idle Node Shutdown:

- The :idle_shutdown_after parameter defines a timeout period. If a runner remains idle beyond this duration, it is terminated to avoid unnecessary costs.

Example Configuration

Here’s how a pool might be configured in the supervision tree:

    children = [
      ...,
      {FLAME.Pool,
       name: MyApp.ImageRunner,
       min: 1,
       max: 10,
       max_concurrency: 5,
       idle_shutdown_after: 30_000}
    ]

In this example:

- At least one runner (min: 1) is always active, reducing latency for incoming tasks.

- Up to ten runners (max: 10) can be spun up during high-load periods.

- Each runner can handle up to five concurrent tasks (max_concurrency: 5).

- Idle runners are terminated after 30 seconds (idle_shutdown_after: 30_000), optimizing resource usage.

Trade-offs:

- Setting :min to 0: Enables a "scale to zero" mode where no idle runners incur costs, but cold starts may introduce latency.

- Keeping :min above 0: Reduces latency by ensuring always-available runners but increases operational costs.
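Because each pool carries its own settings, this trade-off can be made per workload rather than once for the whole application. The sketch below defines two pools side by side using only the options covered above. The module name MyApp.FlamePools and the pool name MyApp.ReportRunner are made up for illustration.

```elixir
defmodule MyApp.FlamePools do
  # Returns child specs to splice into the application's supervision tree.
  def children do
    [
      # Latency-sensitive work: one warm runner is always available.
      # Peak capacity is max * max_concurrency = 10 * 5 = 50 concurrent calls.
      {FLAME.Pool,
       name: MyApp.ImageRunner,
       min: 1,
       max: 10,
       max_concurrency: 5,
       idle_shutdown_after: 30_000},

      # Hypothetical background pool: scales to zero when idle, so the first
      # call after a quiet period pays the cold-start cost.
      {FLAME.Pool,
       name: MyApp.ReportRunner,
       min: 0,
       max: 3,
       max_concurrency: 2,
       idle_shutdown_after: 30_000}
    ]
  end
end
```

In an application module this list would be appended to the other children before starting the supervisor.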

Runner

A FLAME.Runner is responsible for booting a new node and executing a function on it. Each runner represents a remote server provisioned specifically for task execution.

Interaction with the Backend

The actual provisioning of remote servers and application deployment is handled by the FLAME.Backend. This separation of responsibilities ensures modularity and allows for custom backend implementations tailored to specific cloud providers or infrastructure.

Backend and infrastructure management

Provisioning remote compute resources and deploying the application is done by a backend module that implements the FLAME.Backend behavior. Through this module, the parent application issues API calls to the configured cloud provider to provision the necessary compute resources and deploy the application.

For example, the Fly.io backend leverages the Fly.io platform to dynamically deploy and run applications as packaged Docker images. The Fly API is used to spin up new machines with the same image that the parent application is running. This makes the process seamless for developers, enabling on-demand scalability without manual intervention.
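Based on the FLAME README at the time of writing, selecting the Fly.io backend is a matter of runtime configuration along the following lines. Treat it as a sketch: the FLY_API_TOKEN environment variable name is an assumption about your deployment, and option names may differ between FLAME versions.

```elixir
# config/runtime.exs
import Config

if config_env() == :prod do
  # Use the Fly.io backend for FLAME pools in production.
  config :flame, :backend, FLAME.FlyBackend

  # API token the parent application uses to ask Fly to start new machines.
  config :flame, FLAME.FlyBackend, token: System.fetch_env!("FLY_API_TOKEN")
end
```

Outside production, leaving the backend unconfigured should keep FLAME on its local backend, which runs the functions on the current node and makes development and testing straightforward.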

Build Your Own Backend

The flexibility of the FLAME.Backend behavior allows developers to implement custom backends for any cloud provider or infrastructure setup. Whether you use AWS, Google Cloud Platform (GCP) or Azure, you can extend and configure FLAME to suit your specific needs.

This capability ensures that FLAME can integrate into diverse environments, making it adaptable to organizations with unique software requirements or those using less conventional platforms.

Once the application is up and running on the remote node, it hands off control to the FLAME.Terminator.

Terminator

The FLAME.Terminator is a process that operates on remote nodes, managing the lifecycle and ensuring communication between the remote node and the parent application.

It serves as a safeguard for remote function execution by enforcing strict deadlines, monitoring the parent node, and handling system shutdowns.

Key responsibilities:

Remote Procedure Call (RPC) Deadlines:

- All FLAME calls are executed with a specified timeout to ensure they do not run indefinitely.

- When a runner executes a function on a remote node, it checks in with the Terminator, which sets an execution deadline. If the function exceeds this deadline, the Terminator forcefully terminates the process to prevent resource overuse or system instability.

Parent Node Monitoring:

- Upon startup, the Terminator establishes a connection to the parent node and continuously monitors it. This ensures a two-way communication channel that keeps the parent informed of the node's status.

- If the connection fails to establish within a failsafe period or is lost unexpectedly, the Terminator initiates a shutdown of the remote system to maintain consistency and prevent resource leaks.

Failsafe Mechanism:

- By monitoring the node's lifecycle and enforcing deadlines, the Terminator acts as a failsafe, preventing misbehaving processes or lost communication from impacting the overall application.
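The deadline idea can be illustrated with plain local processes. The module below is not FLAME's implementation and the name DeadlineSketch is made up; it only shows the general technique of running a function in a monitored process and killing it when it overruns its time budget.

```elixir
defmodule DeadlineSketch do
  # Runs `fun` in a separate monitored process and gives it `timeout_ms`
  # to finish. Returns {:ok, result}, {:error, {:exit, reason}} if the
  # function crashes, or {:error, :timeout} if the deadline passes.
  def run_with_deadline(fun, timeout_ms) when is_function(fun, 0) do
    caller = self()
    ref = make_ref()

    {pid, monitor} = spawn_monitor(fn -> send(caller, {ref, fun.()}) end)

    receive do
      {^ref, result} ->
        # Finished in time: drop the monitor and its pending :DOWN message.
        Process.demonitor(monitor, [:flush])
        {:ok, result}

      {:DOWN, ^monitor, :process, ^pid, reason} ->
        # The function crashed before producing a result.
        {:error, {:exit, reason}}
    after
      timeout_ms ->
        # Deadline exceeded: forcefully stop the worker process.
        Process.exit(pid, :kill)
        Process.demonitor(monitor, [:flush])
        {:error, :timeout}
    end
  end
end
```

Calling it with a fast function returns `{:ok, result}`, while a function that sleeps past the deadline is killed and the call returns `{:error, :timeout}`.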

Execution Flow Summary

Now that we’ve explored the modules and components of FLAME, let’s summarize in detail how a FLAME.call flows through them:

Invocation:

- When FLAME.call is invoked, the FLAME.Pool checks for an available FLAME.Runner process to execute the function.

Runner Allocation:

- If a runner is available, it is reused.

- If no runner is available:

  - The FLAME.Pool spawns a new FLAME.Runner process.

  - The FLAME.Runner provisions a remote server using the configured FLAME.Backend and deploys the application on the server.

  - Upon successful provisioning:

    - A FLAME.Terminator process is spawned on the remote node, and its PID is returned. The FLAME.Runner begins monitoring this terminator.

    - The new runner’s PID is returned for task execution.

Execution:

- The selected runner executes the given function remotely using Node.spawn_monitor and sets a deadline for the FLAME.Terminator to ensure the remote node shuts down after the specified time.

- The function runs on the remote node, and the result is sent back to the calling process.
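The runner allocation step can be modeled in a few lines of plain Elixir. This is a deliberately simplified sketch, not FLAME's actual pool logic: the PoolSketch module, its struct fields, and the :reused, :booted, and :wait return tags are all invented for illustration, and "booting" here just adds an entry to a map instead of provisioning a server.

```elixir
defmodule PoolSketch do
  # `runners` maps a runner id to the number of calls it is currently serving.
  defstruct max: 10, max_concurrency: 5, runners: %{}

  # Picks a runner for one incoming call and returns the updated pool.
  def checkout(%__MODULE__{runners: runners, max: max, max_concurrency: limit} = pool) do
    case Enum.find(runners, fn {_id, in_flight} -> in_flight < limit end) do
      {id, in_flight} ->
        # An existing runner has spare capacity, so it is reused.
        {:reused, id, %{pool | runners: Map.put(runners, id, in_flight + 1)}}

      nil when map_size(runners) < max ->
        # Every runner is busy but the pool may still grow: boot a new one.
        id = map_size(runners) + 1
        {:booted, id, %{pool | runners: Map.put(runners, id, 1)}}

      nil ->
        # The pool is at :max and fully busy; the caller has to wait.
        {:wait, pool}
    end
  end
end
```

With `max: 2` and `max_concurrency: 2`, five consecutive checkouts boot runner 1, reuse it, boot runner 2, reuse it, and then report `:wait`, which stands in for whatever the real pool does at capacity.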

Summary

This detailed flow ties together the high-level concepts with the inner workings of FLAME. By leveraging its modular design and remote execution capabilities, FLAME ensures flexibility, scalability, and efficient resource management.
