Absinthe with Phoenix Framework - a guide to properly get started with GraphQL using Elixir

The goal of this tutorial is to lay out the basics about queries, mutations, subscriptions, and everything around.

This is a guide about implementing a GraphQL server in Elixir using the Phoenix Framework with Absinthe. Absinthe is an all-in-one GraphQL toolkit to create APIs. You will learn how to correctly set up an Elixir & Phoenix project to handle GraphQL requests, how to use Absinthe DSL to define queries, mutations, and subscriptions, how to structure the app before it gets messy, and some things about middleware, tests, auth, and more.

Note: This guide assumes you have basic knowledge of Phoenix and GraphQL.

Getting started

Let's start building the app. The first step, as always, is to initialize a project. So, let's create a new Elixir & Phoenix project without assets (Tailwind, Esbuild) and HTML. We want to create a GraphQL API, so these are not needed.

mix phx.new app --no-assets --no-html

I have prepared a GitHub repository that follows the tutorial. You can take a look when you are stuck.

Install dependencies

To get started with Absinthe, we need to add it as a dependency.

# mix.exs

{:absinthe, "~> 1.7"},

{:absinthe_plug, "~> 1.5"}

And install the new dependencies.

mix deps.get

Configure the formatter

This step is optional but recommended and adds formatter support for Absinthe's DSL and GraphQL files.

# .formatter.exs

import_deps: [..., :absinthe],

plugins: [..., Absinthe.Formatter],

inputs: [..., "{lib,priv}/**/*.{gql,graphql}"]

Basic configuration

Without further ado, let's configure the app.

Configure Plug's parser

The first thing we have to configure is Plug's parser. We add Absinthe.Plug.Parser to the parsers option, which adds support for incoming requests of type application/graphql by extracting the query from the body.

# lib/app_web/endpoint.ex

plug Plug.Parsers,

parsers: [..., Absinthe.Plug.Parser],

...

Configure router

Absinthe's documentation shows a few ways to use Absinthe.Plug to expose a GraphQL API. We will create a new Plug router and forward to it from the main Phoenix router. This way we do not limit ourselves if we want to add other routes in the future.

# lib/app_web/graphql/router.ex

defmodule AppWeb.GraphQl.Router do

use Plug.Router

plug :match

plug :dispatch

forward "/graphql",

to: Absinthe.Plug,

init_opts: []

end

As you can see, we have forwarded the /graphql path to Absinthe.Plug, and that's almost all. I have left the init_opts option empty for now; the route will not work without a schema, and we will add one in the next step. The Absinthe.Plug documentation lists all available options.

Now, we have to forward to the GraphQL router from the main router. This will expose the API at the /api/graphql path.

# lib/app_web/router.ex

scope "/", AppWeb do

forward "/api", GraphQl.Router

end

About GraphiQL

As with most of the backend GraphQL solutions, Elixir Absinthe provides a GraphiQL playground via the Absinthe.Plug.GraphiQL plug. There is support for multiple different interfaces.

# lib/app_web/graphql/router.ex

if Application.compile_env(:app, :dev_routes) do

forward "/graphiql",

to: Absinthe.Plug.GraphiQL,

init_opts: [

interface: :playground

]

end

Basic schema

Everything in GraphQL is schema-driven: we define types, queries, and everything else in the schema, and we use the query language to request data from a server. So let's create our first schema.

Define starting schema

To start with our schema, we have to use the Absinthe.Schema module. The module allows us to:

- define root types for queries, mutations, and subscriptions using the corresponding macros,

- and expand the schema using plugins, middleware, and context functions.

At this point, it is a good idea to import custom types from Absinthe.Type.Custom by using the import_types macro. This gives us scalar types such as :datetime, :naive_datetime, and :decimal, which map to Elixir's DateTime and NaiveDateTime structs and to Decimal from the decimal library.

# lib/app_web/graphql/schema.ex

defmodule AppWeb.GraphQl.Schema do

use Absinthe.Schema

# datetime, naive_datetime, decimal

import_types Absinthe.Type.Custom

# query do

# end

# mutation do

# end

# subscription do

# end

end

Let's make use of the schema and add the schema option to init_opts for the GraphQL and GraphiQL routes. The value of the option should point to the module where the schema is defined.

Note: You can define multiple schemas, for example: one schema with every possible field for GraphQL route and another with only public fields for GraphiQL route.

# lib/app_web/graphql/router.ex

init_opts: [

schema: AppWeb.GraphQl.Schema

]

Now let's create some resources that we can work on. Later, we will replace users with the phx.gen.auth.

mix phx.gen.context Accounts User users email:string

mix phx.gen.context Blog Post posts title:string user_id:references:users

Create the first type

GraphQL types describe structures of queried data. To define a type in the Absinthe schema, we use an object macro and a field macro inside the object macro.

# lib/app_web/graphql/schema.ex

# object :post, name: "PostType" do

object :post do

field :id, non_null(:id)

field :title, :string

field :user_id, non_null(:id)

field :inserted_at, non_null(:naive_datetime)

field :updated_at, non_null(:naive_datetime)

end

Let's break down the example above:

- `object :post do` defines a GraphQL object named "Post".

- `object :post, name: "PostType" do` defines a GraphQL object named "PostType". In the Absinthe schema, we would still use `:post`.

- `field :title, :string` defines a string field named "title".

- `field :id, non_null(:id)` defines a field with the type of `:id`. `non_null` is one of two available utility functions; it makes the field non-nullable. The second utility function is `list_of`, which wraps a given type in a list.

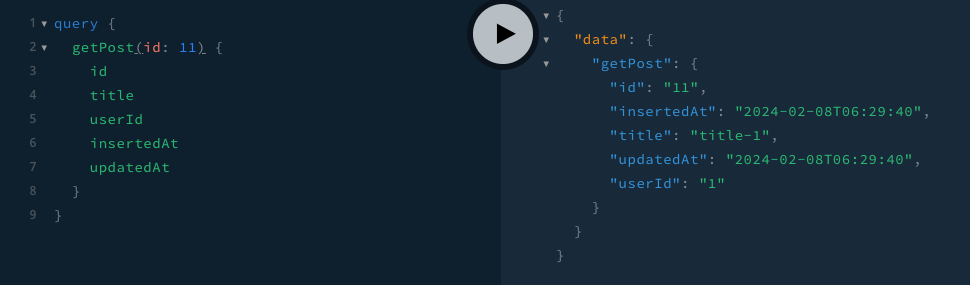

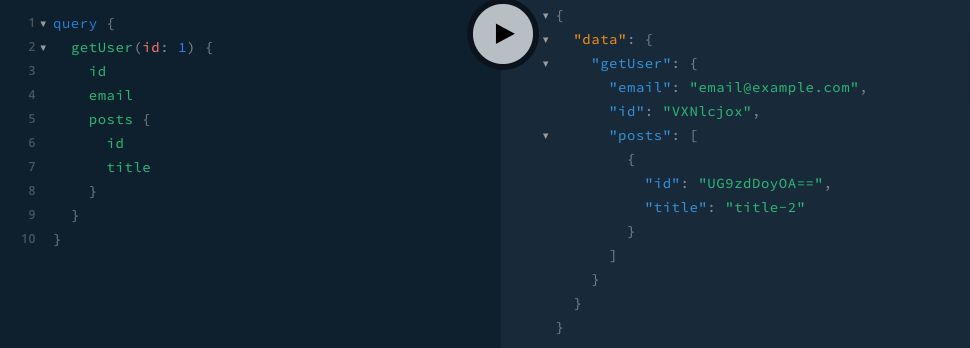

Create the first query

GraphQL queries are a way to request specific data from a server flexibly and efficiently. To create a query, we have to define a field inside the do-block of the query macro.

# lib/app_web/graphql/schema.ex

query do

field :get_post, :post do

arg :id, non_null(:id)

resolve fn %{id: id}, _ -> {:ok, App.Blog.get_post(id)} end

end

end

Let's break down the example above:

- `field :get_post, :post do` defines a GraphQL field named "getPost" (Absinthe camel-cases field names in the public API) with a nullable return type of `:post`. As you can imagine, the `:post` type is the object that we defined earlier.

- `arg :id, non_null(:id)` defines an argument that the query will accept. In this case, `non_null` indicates that the argument is required.

- `resolve fn %{id: id}, _ -> {:ok, App.Blog.get_post(id)} end` is the resolver function for the query. Resolvers are responsible for fetching the actual data for a query. In this case, the function has an arity of 2. The first argument is a map that contains the arguments provided to the query; in this example, the map will always contain an id. The second argument is an `Absinthe.Resolution` struct. The resolver function returns a tuple with the `:ok` atom, which indicates that the query was successful.

Note: Under the hood, the `query`, `mutation` and `subscription` macros are just objects with a custom name, e.g., `mutation` -> "RootMutationType".

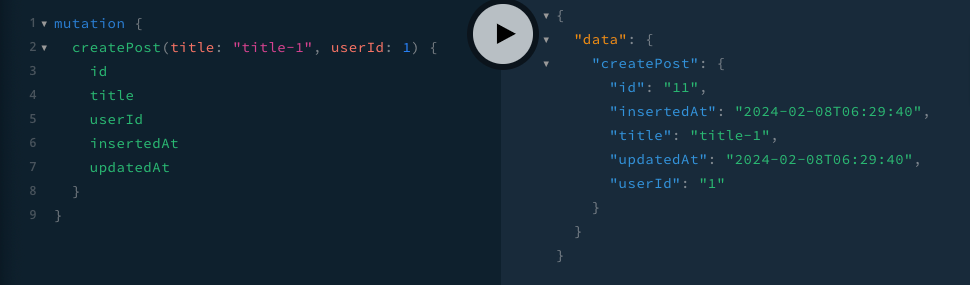
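To see the naming in action without the Phoenix app, here is a minimal standalone sketch. It assumes a script run with the elixir command and network access for Mix.install; SketchSchema and the hard-coded post are hypothetical stand-ins for our schema and App.Blog.get_post/1. It executes a document directly with Absinthe.run instead of going through HTTP.

```elixir
# Standalone script; Mix.install fetches Absinthe on first run.
Mix.install([{:absinthe, "~> 1.7"}])

defmodule SketchSchema do
  use Absinthe.Schema

  object :post do
    field :id, non_null(:id)
    field :title, :string
  end

  query do
    field :get_post, :post do
      arg :id, non_null(:id)

      # Hypothetical stand-in for App.Blog.get_post/1: no database involved.
      resolve fn %{id: id}, _resolution -> {:ok, %{id: id, title: "Hello"}} end
    end
  end
end

# The field defined as :get_post is queried as getPost.
{:ok, result} = Absinthe.run(~s|{ getPost(id: "1") { id title } }|, SketchSchema)
IO.inspect(result)
```

The result should be a map with a :data key that holds the post under the "getPost" key.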

Create the first mutation

GraphQL mutations are a way to modify data on the server. To create a mutation, we have to define a field inside the do-block of the mutation macro.

# lib/app_web/graphql/schema.ex

mutation do

field :create_post, non_null(:post) do

arg :title, non_null(:string)

arg :user_id, non_null(:id)

resolve fn args, _ -> App.Blog.create_post(args) end

end

end

Let's break down the example above:

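- `field :create_post, non_null(:post) do` defines a GraphQL field named "createPost" with a non-nullable return type of `:post`.

- `arg :title, non_null(:string)` defines an argument that the mutation will accept.

- `resolve fn args, _ -> App.Blog.create_post(args) end` is the resolver function for the mutation. The generated App.Blog.create_post/1 already returns an {:ok, post} or {:error, changeset} tuple, so we return its result directly.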

Note: Instead of `field :create_post, non_null(:post) do` we can write `field :create_post, type: non_null(:post) do` and the result will be the same.

Input objects

Input objects are used to represent nested input structures in a GraphQL query or mutation. While regular objects in GraphQL are used only to represent output types, input objects are specifically designed to handle input arguments. To create an input object, we have to use the input_object macro and field macros to define its fields.

# lib/app_web/graphql/schema.ex

input_object :create_post_input do

field :title, non_null(:string)

field :user_id, non_null(:id)

end

mutation do

field :create_post, type: non_null(:post) do

arg :input, non_null(:create_post_input)

resolve fn %{input: args}, _ -> App.Blog.create_post(args) end

end

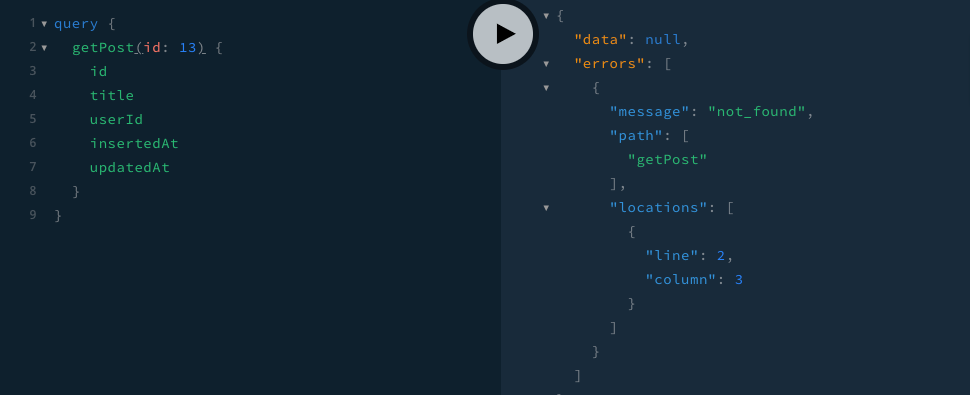

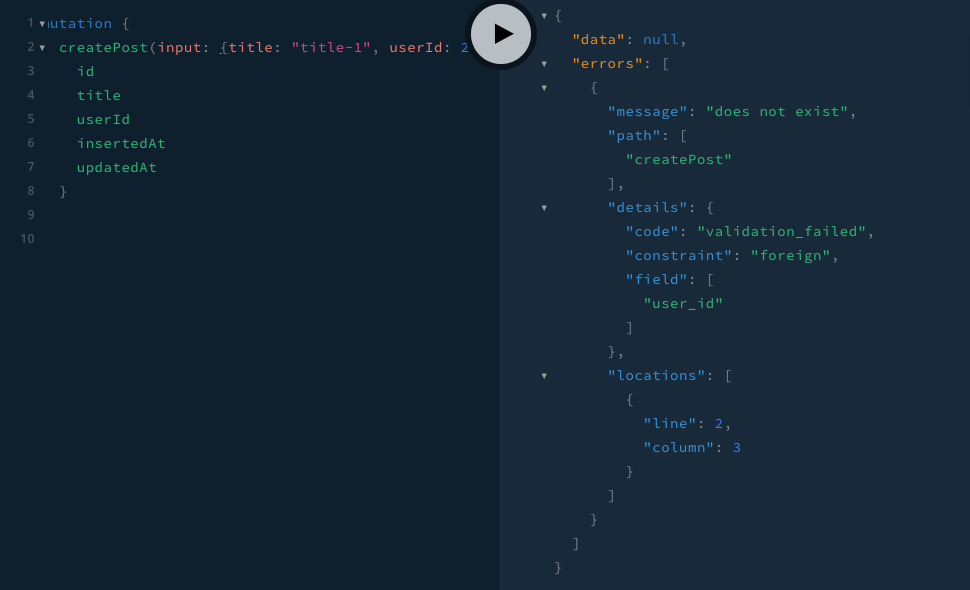

endReturning errors

We have learned that to return data, a resolve function has to return a tuple with :ok as the first element. Errors are returned in a very similar manner: instead of :ok, we return :error. As the value of the error, we can return:

- A simple string.

- A map or keyword list that includes a "message" key along with any additional serializable metadata.

- A collection comprising various combinations of the above structures.

- Any other value that is compatible with the to_string/1 function, e.g., an atom or a number.

{:error, "Invalid"}

# Custom errors

{:error, %{message: "Invalid", details: %{type: "string"}}}

# Multiple errors

{:error, ["Invalid", %{message: "Invalid", details: %{type: "string"}}]}

Here is an example of how to return an error when a post is not found. Please note that I have changed the return type to a non-nullable post.

# lib/app_web/graphql/schema.ex

alias App.Blog.Post

...

field :get_post, non_null(:post) do

arg :id, non_null(:id)

resolve fn %{id: id}, _ ->

case App.Blog.get_post(id) do

%Post{} = post -> {:ok, post}

_ -> {:error, :not_found}

end

end

end
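If you want to play with these return shapes outside of the schema, a resolver is just a function, so a plain Elixir sketch is enough. Everything here is hypothetical: ErrorSketch, the in-memory @posts map standing in for App.Blog.get_post/1, and the details keys, which are only an example of extra metadata next to message.

```elixir
defmodule ErrorSketch do
  # Hypothetical in-memory "table" standing in for App.Blog.get_post/1.
  @posts %{1 => %{id: 1, title: "Hello"}}

  # A 2-arity resolver: arguments first, resolution second (ignored here).
  def get_post(%{id: id}, _resolution) do
    case Map.get(@posts, id) do
      nil ->
        # A map error: :message is required, the rest is custom metadata.
        {:error, %{message: "Post not found", details: %{code: :not_found, id: id}}}

      post ->
        {:ok, post}
    end
  end
end

IO.inspect(ErrorSketch.get_post(%{id: 1}, nil))
IO.inspect(ErrorSketch.get_post(%{id: 2}, nil))
```

Returning a map instead of a bare atom like :not_found gives the client both a readable message and something machine-readable to branch on.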

Resolvers

Resolvers are functions responsible for retrieving or modifying the data from a server. So far we have defined some in-line resolvers using the resolve macro. The better way of handling resolvers is to define them as named functions outside of a query or mutation. By doing that, we can easily pattern-match on the arguments.

# lib/app_web/graphql/schema.ex

def get_post(%{id: id}, _resolution) do

case App.Blog.get_post(id) do

%Post{} = post -> {:ok, post}

_ -> {:error, :not_found}

end

end

As you can see, we are still using the resolve macro.

# lib/app_web/graphql/schema.ex

field :get_post, non_null(:post) do

arg :id, non_null(:id)

resolve &get_post/2

end

What is a resolution?

The last argument in a resolver function is referred to as the resolution argument. This argument provides information about the current state of the resolution process and includes details that the resolver may use or interact with. The resolution is an Absinthe.Resolution struct, and its fields include information such as the parent result, arguments, context, and execution state. The most common use case for the resolution is an implementation of custom error handling.

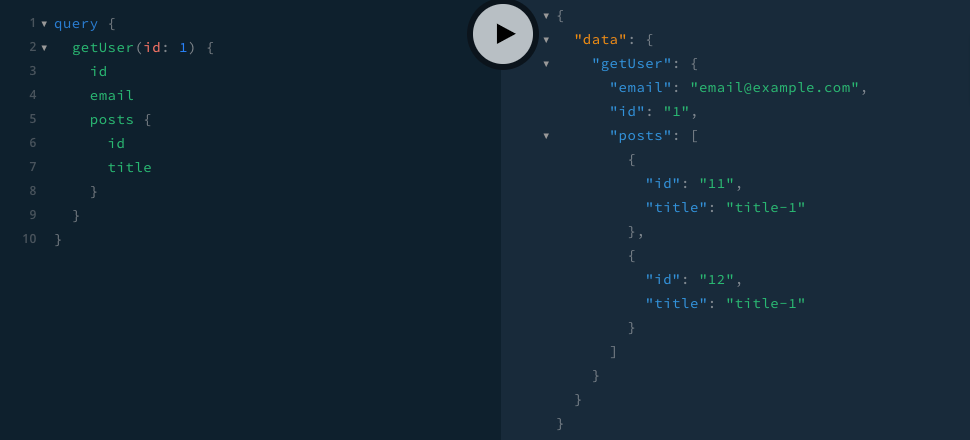
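Because the resolution is just data, a resolver can pattern-match on it like on any other argument. The sketch below is a simplified illustration: a plain map stands in for the Absinthe.Resolution struct, and the :current_user key under :context is a hypothetical convention, not something Absinthe sets up for us.

```elixir
defmodule ResolutionSketch do
  # Matches only when the context carries a user (hypothetical :current_user key).
  def get_post(%{id: id}, %{context: %{current_user: user}}) do
    {:ok, %{id: id, requested_by: user.email}}
  end

  # Fallback clause: no user in the context.
  def get_post(_args, _resolution), do: {:error, "unauthenticated"}
end

with_user = %{context: %{current_user: %{email: "a@example.com"}}}
without_user = %{context: %{}}

IO.inspect(ResolutionSketch.get_post(%{id: "1"}, with_user))
IO.inspect(ResolutionSketch.get_post(%{id: "1"}, without_user))
```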

Handling nested data

GraphQL's ability to represent nested and hierarchical data structures is one of its key strengths, and Elixir Absinthe provides us with a simple way of doing so. We will use the resolve macro again, but instead of providing a function with an arity of 2, we will provide one with an arity of 3. The only difference is that the first argument is now a parent, the second is arguments and the third is a resolution. The parent is an object that is one higher in the hierarchy. In the example below, the parent will be the user. Based on the parent, we can fetch all of the user's posts.

# lib/app_web/graphql/schema.ex

object :user do

field :id, non_null(:id)

field :email, :string

field :inserted_at, non_null(:datetime)

field :updated_at, non_null(:datetime)

# field :posts, list_of(:post), resolve: &list_posts/3

field :posts, list_of(:post) do

arg :date, :date

resolve &list_posts/3

end

end

def list_posts(%App.Accounts.User{} = author, args, _resolution) do

{:ok, App.Blog.list_posts(author, args)}

end

def list_posts(_parent, args, _resolution) do

{:ok, App.Blog.list_posts(args)}

end

The N + 1 problem

With nested data, a common problem arises: the N+1 problem. The N+1 problem occurs when resolving a list of items that have relationships with other types. To better explain the issue, let's take a look at the example below. The post has an author; resolving it naively like this will result in excessive database queries.

# lib/app_web/graphql/schema.ex

object :post do

...

field :user, non_null(:user), resolve: &get_user/3

end

def get_user(%{user_id: user_id}, _args, _resolution) do

{:ok, App.Accounts.get_user(user_id)}

end

The issue shows itself when we want to list posts with their authors. We will use one SQL query to list the posts and one query for each post to get the author. This is where the name N+1 comes from:

- N - number of posts, for each we have to fetch the author.

- 1 - query to list the posts.

query {

listPosts {

id

user {

id

}

}

}

How do we remedy the N+1 issue? There are two easy ways to solve it. The first one is to use joins in the resolver function of a query or mutation. The second one, which is described in the documentation, is to batch the lookups. It means that instead of fetching the author for each post, we can fetch every needed author in one query. We just have to aggregate every user_id from the fetched posts, and then query the needed data. Later we will learn about the Dataloader library which will batch data for us with little to no effort.
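The difference between the two approaches is easier to see in plain Elixir. This is only a sketch of the idea, not how Absinthe implements batching: BatchSketch, its in-memory data, and fetch_users/1 (standing in for a single SQL query) are all hypothetical.

```elixir
defmodule BatchSketch do
  # Hypothetical in-memory data standing in for database tables.
  @users %{1 => %{id: 1, email: "a@example.com"}, 2 => %{id: 2, email: "b@example.com"}}
  @posts [%{id: 10, user_id: 1}, %{id: 11, user_id: 2}, %{id: 12, user_id: 1}]

  # Stand-in for one SQL query: SELECT ... WHERE id IN (ids).
  defp fetch_users(ids), do: Map.take(@users, ids)

  # N+1 style: one lookup per post. Returns {posts_with_users, lookup_count}.
  def naive do
    posts =
      Enum.map(@posts, fn post ->
        users = fetch_users([post.user_id])
        Map.put(post, :user, users[post.user_id])
      end)

    {posts, length(@posts)}
  end

  # Batched: aggregate the ids first, then a single lookup for all posts.
  def batched do
    ids = @posts |> Enum.map(& &1.user_id) |> Enum.uniq()
    users = fetch_users(ids)
    posts = Enum.map(@posts, fn post -> Map.put(post, :user, users[post.user_id]) end)

    {posts, 1}
  end
end

{naive_posts, naive_lookups} = BatchSketch.naive()
{batched_posts, batched_lookups} = BatchSketch.batched()
IO.inspect({naive_lookups, batched_lookups, naive_posts == batched_posts})
```

Both functions build the same list of posts; the batched version just gets there with one lookup instead of one per post.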

The batch helper

Absinthe provides us with the batch helper function. As the name suggests the function will help us batch data. In the code snippet below, the batch helper function is used to efficiently fetch user data by their IDs associated with each post. The list_users_by_ids/2 function retrieves the users from the database based on the provided IDs, and the batch result handler extracts the user data for the specific post.

# lib/app_web/graphql/schema.ex

import Ecto.Query

import Absinthe.Resolution.Helpers

alias App.Accounts.User

object :post do

...

field :user, non_null(:user) do

resolve fn post, _args, _resolution ->

batch({__MODULE__, :list_users_by_ids, User}, post.user_id, fn batch_results ->

{:ok, Map.get(batch_results, post.user_id)}

end)

end

end

end

def list_users_by_ids(_model, user_ids) do

users = App.Repo.all from u in User, where: u.id in ^user_ids

Map.new(users, fn user -> {user.id, user} end)

end

Document the schema

There is a lot of talk about how GraphQL is self-documenting. I agree with that statement to some degree. Objects and fields named, for example, "CreatePostInput", "Id" or even "DeletePost" don't need documentation; they are self-explanatory. But sometimes the name alone is not enough. To add a description to a field or object, we use @desc: just put it above the given field or object.

# lib/app_web/graphql/schema.ex

@desc "This is a user"

object :user do

@desc "This is the user's id"

field :id, non_null(:id)

...

end

Enums

GraphQL enums provide a way to define a set of named values for a field. Enums are useful when you want to restrict a field to a predefined set of options, ensuring that only specific values are allowed. To create an enum, we have to use the enum macro, and to define possible values, the value macro. The value macro can accept the as option that will provide custom value mapping.

# lib/app_web/graphql/schema.ex

@desc "You can describe enums as well"

enum :post_type do

value :announcement

value :advertisement

value :tutorial, as: "GUIDE"

end

Enums are used just like normal types.

# lib/app_web/graphql/schema.ex

object :post do

...

field :type, :post_type

end

How to properly structure the app (the Absinthe part)

This part of the guide is very opinionated. You probably noticed that I've placed all the Absinthe-related files under the lib/app_web/graphql path. As with the router, I want to separate everything GraphQL-related in case we have to expand the app in some other manner.

I will present you with two different but very similar structures. Choose what fits you the most:

- In the first structure, we will group everything on the operation level. It means that all resolvers will be in the resolvers folder, all mutations will be in the mutations folder, etc. Then we will create a folder for every context inside.

lib/
  app_web/
    graphql/
      (queries|mutations|subscriptions|types).ex
      resolvers/blog/post_resolvers.ex
      queries/blog/post_queries.ex
      mutations/blog/post_mutations.ex
      subscriptions/blog/post_subscriptions.ex
      types/blog/post_types.ex

- The second structure is similar but doesn't separate everything on the operation level. Instead, everything is separated on the context level, e.g., the blog is a parent folder for all types, mutations, etc. for a given resource in this context.

lib/
  app_web/
    graphql/
      operations/
        (queries|mutations|subscriptions|types).ex
        blog/post/
          post_resolvers.ex
          post_queries.ex
          post_mutations.ex
          post_subscriptions.ex
          post_types.ex

The relocation of code and the import of types

To start moving code out of the schema, we have to use the Absinthe.Schema.Notation module in the new modules. This module allows us to use the same DSL as in the schema.

Types

Let's start with types: use the notation module and just copy the type objects.

# lib/app_web/graphql/types/blog/post_types.ex

# lib/app_web/graphql/operations/blog/post/post_types.ex

defmodule AppWeb.GraphQl.Blog.PostTypes do

use Absinthe.Schema.Notation

object :post do

...

end

end

Instead of importing everything in the schema, let's create a new module where we will import all the types.

# lib/app_web/graphql/types.ex

# lib/app_web/graphql/operations/types.ex

defmodule AppWeb.GraphQl.Types do

use Absinthe.Schema.Notation

# Blog

import_types AppWeb.GraphQl.Blog.PostTypes

end

And import the types from the module above in the schema.

# lib/app_web/graphql/schema.ex

defmodule AppWeb.GraphQl.Schema do

use Absinthe.Schema

...

import_types AppWeb.GraphQl.Types

end

Resolvers

Resolvers are just normal Elixir functions, so just copy these to the new place.

# lib/app_web/graphql/resolvers/blog/post_resolvers.ex

# lib/app_web/graphql/operations/blog/post/post_resolvers.ex

defmodule AppWeb.GraphQl.Blog.PostResolvers do

alias App.Blog

alias App.Blog.Post

def get_post(%{id: id}, _) do

case Blog.get_post(id) do

%Post{} = post -> {:ok, post}

_ -> {:error, :not_found}

end

end

end

Operations

To move out queries, mutations, and subscriptions we have to wrap these fields in an object. In this example I have named the objects :post_queries, :post_mutations, and :post_subscriptions.

# lib/app_web/graphql/(queries|mutations|subscriptions)/blog/post_queries.ex

# lib/app_web/graphql/operations/blog/post/post_queries.ex

defmodule AppWeb.GraphQl.Blog.PostQueries do

use Absinthe.Schema.Notation

alias AppWeb.GraphQl.Blog.PostResolvers

object :post_queries do

field :get_post, non_null(:post) do

arg :id, non_null(:id)

resolve &PostResolvers.get_post/2

end

end

end

As with the types, let's create files where we import these operations.

# lib/app_web/graphql/queries.ex

# lib/app_web/graphql/operations/queries.ex

defmodule AppWeb.GraphQl.Queries do

use Absinthe.Schema.Notation

# Blog

import_types AppWeb.GraphQl.Blog.PostQueries

end

And import the types from these modules. Additionally, we have to import fields from these types into the corresponding do-blocks.

# lib/app_web/graphql/schema.ex

defmodule AppWeb.GraphQl.Schema do

use Absinthe.Schema

...

import_types AppWeb.GraphQl.Queries

import_types AppWeb.GraphQl.Mutations

import_types AppWeb.GraphQl.Subscriptions

query do

import_fields :post_queries

end

mutation do

import_fields :post_mutations

end

subscription do

import_fields :post_subscriptions

end

end

Handle changeset errors

Unfortunately, Absinthe does not come with changeset error handling by default. The documentation suggests handling the errors with the Absinthe ErrorPayload library. I don't think it is the best idea. The main point I have against it is that it does not align with the GraphQL specification. Instead of returning errors under the errors key, it introduces its own way of handling errors, wrapping the returned data in payloads and putting errors under the messages key. So instead of using this library, we will create our own changeset error handling with middleware.

What is middleware?

In Absinthe, middlewares are modules that allow you to intercept and modify the execution of a GraphQL field at various stages of the processing. Middleware provides a way to add custom logic, validation, or any other behavior to the execution of a GraphQL operation. These are applied in sequential order, allowing you to customize the handling of requests in a modular and composable manner.

Note: The `resolve` macro is a middleware under the hood.
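The "applied in sequential order" part can be pictured as a simple reduce over a list of functions. The sketch below is a mental model only, under loud assumptions: a plain map with :value and :errors keys stands in for the resolution, and MiddlewareSketch with its two functions is hypothetical, not Absinthe's API.

```elixir
defmodule MiddlewareSketch do
  # Plays the role of the resolver: puts a value into the resolution.
  def resolve_post(resolution), do: %{resolution | value: %{title: "hello"}}

  # A later middleware: transforms the value produced by the resolver.
  def upcase_title(%{value: %{title: title} = post} = resolution) do
    %{resolution | value: %{post | title: String.upcase(title)}}
  end

  # Runs every middleware in order, threading the resolution through.
  def run(middleware) do
    Enum.reduce(middleware, %{value: nil, errors: []}, fn fun, resolution ->
      fun.(resolution)
    end)
  end
end

IO.inspect(
  MiddlewareSketch.run([
    &MiddlewareSketch.resolve_post/1,
    &MiddlewareSketch.upcase_title/1
  ])
)
```

Order matters here: swapping the two functions would make upcase_title fail to match, because no value exists yet. The same reasoning applies to where we place real middleware relative to resolve.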

Let's handle the errors with our first middleware

Absinthe provides us with the Absinthe.Middleware behaviour. The behaviour requires only one function: call/2. The first argument is a resolution, and the second is the options that we can provide to the middleware. The function has to return the resolution, so a middleware is just a function that manipulates a resolution.

# lib/app_web/graphql/middleware/handle_changeset_errors.ex

defmodule AppWeb.GraphQl.Middleware.HandleChangesetErrors do

alias Ecto.Changeset

@behaviour Absinthe.Middleware

def call(resolution, _opts) do

%{

resolution

| errors:

Enum.flat_map(resolution.errors, fn

%Changeset{} = changeset -> handle_changeset(changeset)

error -> [error]

end)

}

end

defp handle_changeset(%{valid?: true}), do: []

defp handle_changeset(changeset) do

changeset

|> Changeset.traverse_errors(&put_message_into_changeset_error/1)

|> Enum.flat_map(fn {field, errors} ->

format_changeset_errors([field], errors)

end)

end

defp format_changeset_errors(path, errors) do

Enum.flat_map(errors, fn

%{message: message, validation: validation} ->

[

%{

message: message,

details: %{

code: :validation_failed,

validation: to_string(validation),

field: Enum.reverse(path)

}

}

]

%{message: message, constraint: constraint} ->

[

%{

message: message,

details: %{

code: :validation_failed,

constraint: to_string(constraint),

field: Enum.reverse(path)

}

}

]

{:message, message} when is_binary(message) ->

[

%{

message: Gettext.gettext(AppWeb.Gettext, message),

details: %{

code: :validation_failed,

field: Enum.reverse(path)

}

}

]

%{message: message} when is_binary(message) ->

[

%{

message: message,

details: %{

code: :validation_failed,

field: Enum.reverse(path)

}

}

]

# Handle multiple errors

{field, errors} when is_map(errors) or is_list(errors) ->

format_changeset_errors([field | path], errors)

# Handle nested changeset errors

error when is_map(error) ->

format_changeset_errors(path, error)

end)

end

defp put_message_into_changeset_error({msg, opts}) do

translated_message = Gettext.dgettext(AppWeb.Gettext, "errors", msg, opts)

Enum.into(opts, %{message: translated_message})

end

end

Note: I will not focus on what the middleware is doing in detail, this is not the point of this guide.
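Still, the core idea is small enough to sketch in isolation: walk a nested map of errors and emit one entry per message together with the path to the field. This is a simplified, hypothetical version (FlattenSketch handles only plain message strings and nested maps, with no Gettext, validation metadata, or lists of nested changesets).

```elixir
defmodule FlattenSketch do
  # Walks a nested error map and emits one entry per message with its field path.
  def flatten(errors, path \\ []) do
    Enum.flat_map(errors, fn
      # Leaf: a list of messages for a single field.
      {field, messages} when is_list(messages) ->
        Enum.map(messages, fn message ->
          %{message: message, field: Enum.reverse([field | path])}
        end)

      # Nested errors: recurse with the field added to the path.
      {field, nested} when is_map(nested) ->
        flatten(nested, [field | path])
    end)
  end
end

errors = %{title: ["can't be blank"], author: %{email: ["is invalid"]}}
IO.inspect(FlattenSketch.flatten(errors))
```

The path is built in reverse while recursing and flipped once at the leaf, which is the same trick the middleware uses with Enum.reverse(path).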

As you can see the middleware manipulates the errors in the resolution, so we have to return the changeset errors from the resolver function.

def create_post(%{input: input}, _resolution) do

# Change the input in some way

# On failure, `with` falls through and returns {:error, changeset}

with {:ok, %Post{} = post} <- Blog.create_post(input) do

# Do anything else

{:ok, post}

end

end

Using middleware per operation

One of the ways to use our middleware is to use the middleware macro. The macro can be used at any point inside the field macro's do-block. As our middleware takes in the result from the resolver and transforms it, we have to put the macro after the resolve macro.

# lib/app_web/graphql/mutations/blog/post_mutations.ex

defmodule AppWeb.GraphQl.Blog.PostMutations do

use Absinthe.Schema.Notation

alias AppWeb.GraphQl.Blog.PostResolvers

alias AppWeb.GraphQl.Middleware.HandleChangesetErrors

object :post_mutations do

field :create_post, non_null(:post) do

arg :input, non_null(:create_post_input)

resolve &PostResolvers.create_post/2

middleware HandleChangesetErrors

end

end

end

Using middlewares globally

Adding the middleware to every possible mutation can become annoying. Instead, we can set up global middleware for mutations only. We have to define the middleware/3 function in the schema module. The third argument contains information about the object that is being evaluated, so we can pattern match against it and only add our middleware for mutations. We add our middleware at the end of the list, which ensures that the resolver is called before it.

# lib/app_web/graphql/schema.ex

defmodule AppWeb.GraphQl.Schema do

alias AppWeb.GraphQl.Middleware.HandleChangesetErrors

...

def middleware(middleware, _field, %{identifier: :mutation}) do

middleware ++ [HandleChangesetErrors]

end

def middleware(middleware, _field, _object) do

middleware

end

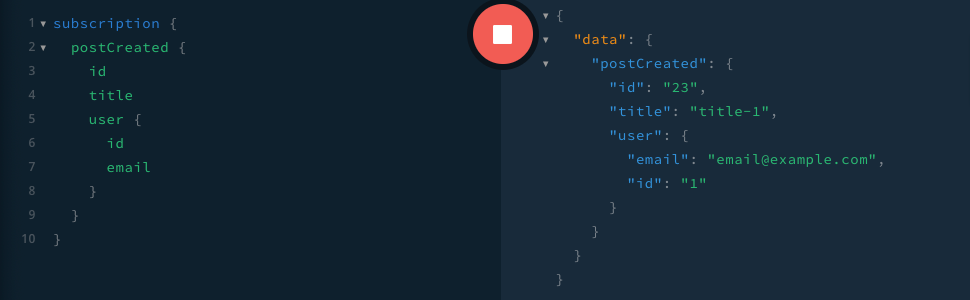

endSubscriptions

In GraphQL, subscriptions are a feature that allows clients to receive real-time updates from the server when specific events occur. While traditional GraphQL queries and mutations are used for request-response patterns, subscriptions enable a persistent connection between the GraphQL client and the server, allowing for asynchronous communication.

Configure the app to handle subscriptions

To get started, we first have to add some new dependencies.

# mix.exs

defp deps do

[

...,

{:phoenix_pubsub, "~> 2.0"},

{:absinthe_phoenix, "~> 2.0.2"}

]

end

If PubSub is not configured yet, we have to configure it.

# config/config.exs

config :app, AppWeb.Endpoint,

...,

pubsub_server: App.PubSub

The next thing on the list is to add App.PubSub and Absinthe.Subscription to the children list of the start function.

# lib/app/application.ex

defmodule App.Application do

def start(_type, _args) do

children = [

...,

{Phoenix.PubSub, name: App.PubSub},

AppWeb.Endpoint,

# ↓ Has to come after PubSub and the endpoint

{Absinthe.Subscription, AppWeb.Endpoint}

]

...

end

end

And last but not least, we have to update our endpoint to use Absinthe.Phoenix.Endpoint.

# lib/app_web/endpoint.ex

defmodule AppWeb.Endpoint do

use Phoenix.Endpoint, otp_app: :app

use Absinthe.Phoenix.Endpoint

...

end

Create and configure user_socket

Absinthe subscriptions require a socket to work. So let's create one:

mix phx.gen.socket User

Add the generated socket to the endpoint.

# lib/app_web/endpoint.ex

defmodule AppWeb.Endpoint do

socket "/api/graphql", AppWeb.UserSocket,

websocket: true,

longpoll: false

end

And update our socket to use Absinthe.Phoenix.Socket.

# lib/app_web/channels/user_socket.ex

defmodule AppWeb.UserSocket do

use Phoenix.Socket

use Absinthe.Phoenix.Socket,

schema: AppWeb.GraphQl.Schema

end

Update GraphiQL

If you are using GraphiQL, you have to update the GraphQL router.

# lib/app_web/graphql/router.ex

if Application.compile_env(:app, :dev_routes) do

forward "/graphiql",

to: Absinthe.Plug.GraphiQL,

init_opts: [

schema: AppWeb.GraphQl.Schema,

socket: AppWeb.UserSocket,

socket_url: "ws://localhost:4000/api/graphql",

interface: :playground

]

end

Create the first subscription

To create a subscription, we must define a field inside the do-block of the subscription macro. As with every field, we can add arguments, middleware, and resolvers. The difference is that a subscription field requires the config macro and, if it should fire automatically after a mutation, the trigger macro. Each of these macros takes a function that returns a topic for the subscription. Topics are used to filter and route subscription events based on given criteria.

Note: I will follow the same structure as for mutations and queries.

# lib/app_web/graphql/subscriptions/blog/post_subscriptions.ex

defmodule AppWeb.GraphQl.Blog.PostSubscriptions do

use Absinthe.Schema.Notation

object :post_subscriptions do

field :post_created, non_null(:post) do

config fn _args, _resolution ->

{:ok, topic: "post_created"}

end

trigger :create_post, topic: fn _ -> "post_created" end

end

end

end

Let's break down the example above:

- `field :post_created, non_null(:post) do` defines a GraphQL field named "postCreated" with a return type of non-nullable `:post`.

- `config fn _args, _resolution -> {:ok, topic: "post_created"} end` sets the topic for the subscription.

- `trigger :create_post, topic: fn _ -> "post_created" end` defines a trigger for a given mutation. In this case, the subscription will be triggered after the `:create_post` mutation. The topic option is a function that returns the topic; to publish to multiple topics, return a list.

Note: Subscriptions have a default resolve function that just returns what is provided through the event, e.g., `resolve fn post, _, _ -> {:ok, post} end`.

Note: Remember to import subscriptions in the schema.
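If topics still feel abstract, the routing idea can be sketched in a few lines of plain Elixir. This is a toy model and not how Absinthe stores subscriptions: TopicSketch, the subscriber names, and the "post_deleted" topic are hypothetical. Each subscriber is registered under the topic its config function returned, and a publish reaches only the subscribers whose topic is among the published ones.

```elixir
defmodule TopicSketch do
  # subscribers: list of {name, topic} pairs, as if each had gone through `config`.
  # topics: a single topic or a list of topics, as a `trigger` function may return.
  def recipients(subscribers, topics) do
    topics = List.wrap(topics)
    for {name, topic} <- subscribers, topic in topics, do: name
  end
end

subscribers = [{:alice, "post_created"}, {:bob, "post_deleted"}]

IO.inspect(TopicSketch.recipients(subscribers, "post_created"))
IO.inspect(TopicSketch.recipients(subscribers, ["post_created", "post_deleted"]))
```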

Publish a subscription outside of the schema

Absinthe gives us a way to trigger a subscription outside the schema, without the usage of the trigger macro. The publish function takes 3 arguments:

- pubsub, we are using Absinthe with Phoenix, so in our case, we have to provide the endpoint.

- data that will be provided to the resolve function of the subscription.

- keyword list where the key is the name of a field and the value is a topic.

Absinthe.Subscription.publish(AppWeb.Endpoint, post, post_created: "post_created")

Dynamic subscription topics

Subscriptions are just normal fields, so we can provide arguments to them. Based on these arguments we can create dynamic topics.

# lib/app_web/graphql/subscriptions/blog/post_subscriptions.ex

defmodule AppWeb.GraphQl.Blog.PostSubscriptions do

use Absinthe.Schema.Notation

object :post_subscriptions do

field :post_created, non_null(:post) do

arg :user_id, :id

config fn args, _resolution ->

case args do

%{user_id: user_id} ->

{:ok, topic: "post_created:#{user_id}"}

_ ->

{:ok, topic: "post_created"}

end

end

trigger :create_post, topic: fn %{user_id: user_id} ->

["post_created", "post_created:#{user_id}"]

end

end

end

end

Tests

Tests. Sadly, we have to test. The documentation outlines three different approaches:

- testing resolver functions,

- testing GraphQL document execution using the Absinthe.run function,

- testing the full HTTP request/response cycle.

I will focus on the third approach, recommended by the documentation because it exercises more of your application.

Create a helper function to post GraphQL requests

We can get started with testing right away, but I think it's better to create a helper that will help us with boilerplate code. Let's create a module that will contain all of our GraphQL helpers in the future.

The first helper function that will help us along is a function that will post a given query, and return a parsed JSON response. As you can see, the gql_post function has a response argument that defaults to 200. GraphQL returns 200 even when the query returns an error. The argument is here to handle the rare cases where the response will contain any other HTTP status.

# test/support/helpers/graphql_helpers.ex

defmodule AppWeb.GraphQlHelpers do

import Phoenix.ConnTest

import Plug.Conn

# Required by the post function from Phoenix.ConnTest

@endpoint AppWeb.Endpoint

def gql_post(options, response \\ 200) do

build_conn()

|> put_req_header("content-type", "application/json")

|> post("/api/graphql", options)

|> json_response(response)

end

end

Create GraphQL test case

Let's create a new ExUnit test case for the GraphQL tests.

# test/support/graphql_case.ex

defmodule AppWeb.GraphQlCase do

use ExUnit.CaseTemplate

using do

quote do

use AppWeb.ConnCase

import AppWeb.GraphQlHelpers

end

end

end

Test the queries

Testing the GraphQL queries is very similar to just testing controllers. You send a request to the server and get a response with data. The next step is to check if the returned data is what you want, and that's it. The only difference is that you have to provide a query in the query key and variables to that query in the variables key.

# test/app_web/graphql/queries/blog/post_queries_test.exs

defmodule AppWeb.GraphQl.Blog.PostQueriesTest do

use AppWeb.GraphQlCase

alias App.BlogFixtures

describe "get_post" do

@get_post """

query($id: ID!) {

getPost(id: $id) {

id

title

}

}

"""

test "returns post when found" do

post = BlogFixtures.post_fixture()

assert %{

"data" => %{

"getPost" => %{

"id" => to_string(post.id),

"title" => post.title

}

}

} ==

gql_post(%{

query: @get_post,

variables: %{"id" => post.id}

})

end

test "returns 'not_found' error when missing" do

assert %{

"data" => nil,

"errors" => [%{"message" => "not_found"}]

} =

gql_post(%{

query: @get_post,

variables: %{"id" => -1}

})

end

end

end

Test the mutations

Testing the mutations works the same as for queries.

# test/app_web/graphql/mutations/blog/post_mutations_test.exs

defmodule AppWeb.GraphQl.Blog.PostMutationsTest do

use AppWeb.GraphQlCase

alias App.AccountsFixtures

describe "create_post" do

@create_post """

mutation($input: CreatePostInput!) {

createPost(input: $input) {

id

title

userId

}

}

"""

test "creates post when input is valid" do

user = AccountsFixtures.user_fixture()

variables = %{

"input" => %{

"title" => "valid title",

"userId" => user.id

}

}

assert %{

"data" => %{

"createPost" => %{

"id" => _,

"title" => "valid title",

"userId" => user_id

}

}

} =

gql_post(%{

query: @create_post,

variables: variables

})

assert to_string(user.id) == user_id

end

test "returns error when input is invalid" do

user = AccountsFixtures.user_fixture()

variables = %{

"input" => %{

"title" => "bad",

"userId" => user.id

}

}

assert %{

"data" => nil,

"errors" => [

%{

"message" => "should be at least 4 character(s)",

"details" => %{"field" => ["title"]}

}

]

} =

gql_post(%{

query: @create_post,

variables: variables

})

end

end

end

Create GraphQL Subscription test case

Testing subscriptions is different from testing queries or mutations. We have to create a socket, subscribe to a subscription, trigger it with a mutation, and lastly check that the subscription returns what we want.

As with normal tests for GraphQL queries, let's create some helpers that will aid us. One for creating a socket, one for subscribing, and one for closing a socket. We don't need to create a new module for the helpers. We can define them right in the new ExUnit test case for subscriptions.

Note: The test will close a socket automatically but we can do the same by hand.

The channel test case isn't present by default, so we must create it first.

# test/support/channel_case.ex

defmodule AppWeb.ChannelCase do

use ExUnit.CaseTemplate

using do

quote do

# Import conveniences for testing with channels

import Phoenix.ChannelTest

import AppWeb.ChannelCase

# The default endpoint for testing

@endpoint AppWeb.Endpoint

end

end

setup tags do

App.DataCase.setup_sandbox(tags)

:ok

end

end

And now it's time for the subscription case.

# test/support/subscription_case.ex

defmodule AppWeb.SubscriptionCase do

use ExUnit.CaseTemplate

alias Absinthe.Phoenix.SubscriptionTest

alias Phoenix.ChannelTest

alias Phoenix.Socket

require Phoenix.ChannelTest

using do

quote do

use AppWeb.ChannelCase

use Absinthe.Phoenix.SubscriptionTest, schema: AppWeb.GraphQl.Schema

import AppWeb.GraphQlHelpers

defp create_socket(params \\ %{}) do

{:ok, socket} = ChannelTest.connect(AppWeb.UserSocket, params)

{:ok, socket} = SubscriptionTest.join_absinthe(socket)

socket

end

defp subscribe(socket, query, options \\ []) do

ref = push_doc(socket, query, options)

assert_reply(ref, :ok, %{subscriptionId: subscription_id})

subscription_id

end

defp close_socket(%Socket{} = socket) do

# Socket will be closed automatically but we can do it manually

# It's useful when we want to test, for example, users with different roles in a loop

Process.unlink(socket.channel_pid)

close(socket)

end

end

end

end

Test the subscriptions

Now let's create the tests. I have added some comments that will explain the code.

# test/app_web/graphql/subscriptions/blog/post_subscriptions_test.exs

defmodule AppWeb.GraphQl.Blog.PostSubscriptionsTest do

use AppWeb.SubscriptionCase

alias App.AccountsFixtures

describe "post_created" do

@create_post """

mutation($input: CreatePostInput!) {

createPost(input: $input) {

id

title

}

}

"""

@post_created """

subscription($userId: ID) {

postCreated(userId: $userId) {

id

title

}

}

"""

test "returns post when created" do

socket = create_socket()

# Subscribe to a post creation

subscription_id = subscribe(socket, @post_created)

user = AccountsFixtures.user_fixture()

post_params = %{title: "valid title", user_id: user.id}

# Trigger the subscription by creating a post

gql_post(%{

query: @create_post,

variables: %{"input" => post_params}

})

# Check if something has been pushed to the given subscription

assert_push "subscription:data", %{result: result, subscriptionId: ^subscription_id}

# Pattern match the result

assert %{

data: %{

"postCreated" => %{

"id" => _,

"title" => title

}

}

} = result

assert post_params.title == title

# Ensure that no other pushes were sent

refute_push "subscription:data", %{}

end

test "returns post when created with provided user id" do

socket = create_socket()

user_one = AccountsFixtures.user_fixture()

subscription_id = subscribe(socket, @post_created, variables: %{"userId" => user_one.id})

gql_post(%{

query: @create_post,

variables: %{"input" => %{title: "valid title", user_id: user_one.id}}

})

assert_push "subscription:data", %{result: result, subscriptionId: ^subscription_id}

assert %{

data: %{

"postCreated" => %{

"id" => _,

"title" => "valid title"

}

}

} = result

# Ensure that no other pushes were sent

refute_push "subscription:data", %{}

user_two = AccountsFixtures.user_fixture()

gql_post(%{

query: @create_post,

variables: %{"input" => %{title: "valid title", user_id: user_two.id}}

})

# Ensure that no pushes were sent

refute_push "subscription:data", %{}

end

end

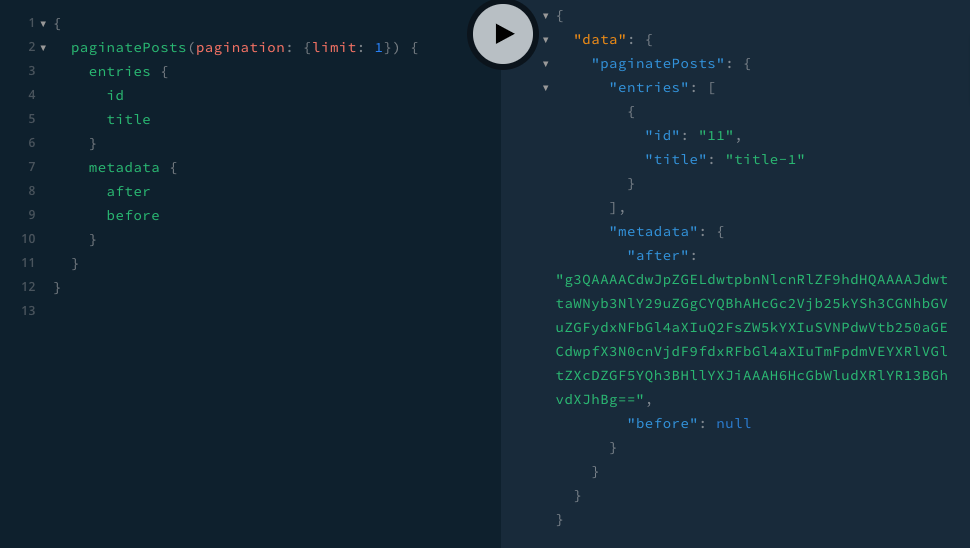

endSimple cursor pagination with Paginator

As we already have the basics covered, it's time to expand the app with new features. The first thing I want to show you is how easy it is to extend GraphQL APIs with Absinthe. So let's implement cursor pagination with Paginator. Firstly, add the library to the dependencies list:

# mix.exs

def deps do

[

...,

{:paginator, "~> 1.2.0"}

]

end

Update the App.Repo with the Paginator, as we would do with all Phoenix applications.

# lib/app/repo.ex

defmodule App.Repo do

use Ecto.Repo,

otp_app: :app,

adapter: Ecto.Adapters.Postgres

use Paginator

end

Create a new context function that will paginate posts.

# lib/app/blog.ex

defmodule App.Blog do

...

def paginate_posts(pagination \\ %{}) do

repo_pagination =

pagination

|> Enum.into(Keyword.new())

|> Keyword.put_new(:limit, 20)

|> Keyword.put(:cursor_fields, [:inserted_at, :id])

Repo.paginate(Post, repo_pagination)

end

end

The next step is to create types for pagination. I have taken these types from the Paginator's documentation: metadata, and input.

# lib/app_web/graphql/types/pagination_types.ex

defmodule AppWeb.GraphQl.PaginationTypes do

use Absinthe.Schema.Notation

object :pagination_metadata do

field :after, :string

field :before, :string

field :limit, :integer

field :total_count, :integer

field :total_count_cap_exceeded, :boolean

end

input_object :pagination_input do

field :after, :string

field :before, :string

field :limit, :integer

field :include_total_count, :boolean

end

end

Import the pagination types in the schema.

# lib/app_web/graphql/schema.ex

defmodule AppWeb.GraphQl.Schema do

...

import_types AppWeb.GraphQl.PaginationTypes

...

end

Add type for paginating the posts.

# lib/app_web/graphql/types/blog/post_types.ex

defmodule AppWeb.GraphQl.Blog.PostTypes do

use Absinthe.Schema.Notation

object :post_pagination do

field :entries, list_of(:post)

field :metadata, :pagination_metadata

end

end

As with all the queries and mutations, add a resolver.

# lib/app_web/graphql/resolvers/blog/post_resolvers.ex

defmodule AppWeb.GraphQl.Blog.PostResolvers do

alias App.Blog

def paginate_posts(args, _resolution) do

pagination = Map.get(args, :pagination, %{})

{:ok, Blog.paginate_posts(pagination)}

end

end

And at last, create the query.

# lib/app_web/graphql/queries/blog/post_queries.ex

defmodule AppWeb.GraphQl.Blog.PostQueries do

use Absinthe.Schema.Notation

alias AppWeb.GraphQl.Blog.PostResolvers

object :post_queries do

...

field :paginate_posts, :post_pagination do

arg :pagination, non_null(:pagination_input)

resolve &PostResolvers.paginate_posts/2

end

end

end

That's it, the pagination works. As you can see, extending the schema with new features is easy and predictable.
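To see what paginate_posts hands over to Repo.paginate, the option-building pipeline can be run on its own. This is a minimal sketch that copies only that pipeline from the context function. The module name is hypothetical, and no database or Paginator call is involved.

```elixir
defmodule PaginationOptionsSketch do
  # Hypothetical module: mirrors the option pipeline of paginate_posts/1
  # without touching the repo.
  def build(pagination \\ %{}) do
    pagination
    # The GraphQL input arrives as a map, the repo call wants a keyword list.
    |> Enum.into(Keyword.new())
    # Only applies when the client did not send a limit.
    |> Keyword.put_new(:limit, 20)
    # Always set by the server, never taken from the client.
    |> Keyword.put(:cursor_fields, [:inserted_at, :id])
  end
end

IO.inspect(PaginationOptionsSketch.build())
IO.inspect(PaginationOptionsSketch.build(%{limit: 5, include_total_count: true}))
```

Using put_new for the limit and put for the cursor fields is the design choice worth noticing: the client may override the page size but not the fields the cursor is built from.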

Dataloader

Dataloader is a utility that helps to efficiently batch and cache database queries when resolving GraphQL fields that involve fetching related data. It addresses the N+1 problem by consolidating multiple database queries into a single batch query, reducing the number of database round-trips and improving performance. We will replace the batch function with the Dataloader.
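The difference between per-item loading and batching can be sketched without any library. In the example below an in-memory map stands in for the users table, and each call to fetch_users/1 stands for one database query. All names are hypothetical, and this is an illustration of the idea, not of how Dataloader is implemented.

```elixir
defmodule BatchingSketch do
  # Illustration only: an in-memory "users table" replaces the database,
  # and every call to fetch_users/1 stands for one query.
  @users %{1 => "ann", 2 => "bob", 3 => "cid"}

  def fetch_users(ids), do: Map.take(@users, ids)

  # N+1 style: one lookup per post.
  def naive(posts) do
    Enum.map(posts, fn post ->
      users = fetch_users([post.user_id])
      {post.title, users[post.user_id]}
    end)
  end

  # Batched style: collect the ids first, look them up once,
  # then hand each post its user from the single result.
  def batched(posts) do
    ids = posts |> Enum.map(& &1.user_id) |> Enum.uniq()
    users = fetch_users(ids)
    Enum.map(posts, fn post -> {post.title, users[post.user_id]} end)
  end
end

posts = [
  %{title: "a", user_id: 1},
  %{title: "b", user_id: 2},
  %{title: "c", user_id: 1}
]

IO.inspect(BatchingSketch.naive(posts))
IO.inspect(BatchingSketch.batched(posts))
```

Both functions return the same pairs. The naive version calls fetch_users/1 once per post, while the batched version calls it once for the whole list.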

# mix.exs

def deps do

[

...,

{:dataloader, "~> 2.0.0"}

]

end

Create a generic data source

The idea is to create different data sources for each way of loading data. Firstly, let's create a generic data source that will just fetch requested data without any filtering, ordering, or anything. To create a data source, we have to define a function that will create a new Ecto Dataloader source. To the source, we have to provide a repo and a query function. The query function has to return a query and will load our data, so if you want to add some filtering, add it here.

# lib/app_web/graphql/schema/basic_data_source.ex

defmodule AppWeb.GraphQl.Schema.BasicDataSource do

def source do

Dataloader.Ecto.new(App.Repo, query: &query/2)

end

def query(queryable, _params) do

queryable

end

end

What is a context?

Before we continue, we have to learn about the context of the resolution. The context is shared data available during the resolution logic of a GraphQL operation. The context object is typically used in resolver functions or middleware to for example handle authorization. The most common thing that you will find in context is a currently logged-in user, which will be probably used for auth.
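As a rough illustration of how a resolver can read the context, the sketch below pattern-matches on a plain map that mimics the shape of the resolution argument. The module, the function, and the email field are assumptions made for the example. In a real resolver the second argument is the resolution struct that Absinthe passes in.

```elixir
defmodule ContextSketch do
  # Hypothetical resolver: the second argument mimics the resolution,
  # of which only the :context key matters here.

  # A user is present in the context: proceed.
  def whoami(_args, %{context: %{current_user: user}}), do: {:ok, user.email}

  # No user in the context: refuse.
  def whoami(_args, _resolution), do: {:error, :unauthorized}
end

IO.inspect(ContextSketch.whoami(%{}, %{context: %{current_user: %{email: "ann@example.com"}}}))
IO.inspect(ContextSketch.whoami(%{}, %{context: %{}}))
```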

Configure the schema to use Dataloader

We have to extend our schema to handle the Dataloader. The Absinthe library comes with the Dataloader plugin and middleware. Let's add the plugin with the plugins function. Plugins are modules that run before and after the resolution of the entire document. Next, we have to implement the context function that will add the dataloader to the resolution's context. In the function, we have to create a new dataloader and add sources using the Dataloader.add_source function, where the second argument is the name of a source and the third is the source itself.

# lib/app_web/graphql/schema.ex

defmodule AppWeb.GraphQl.Schema do

alias AppWeb.GraphQl.Schema.BasicDataSource

...

def context(context) do

loader =

Dataloader.new()

|> Dataloader.add_source(:basic, BasicDataSource.source())

Map.put(context, :loader, loader)

end

def plugins do

[Absinthe.Middleware.Dataloader] ++ Absinthe.Plugin.defaults()

end

end

Create a macro to import the helpers

The Absinthe library comes with the Absinthe.Resolution.Helpers module that contains some helpers, e.g., batch, and dataloader. So let's create a macro that will import these for us.

# lib/app_web/graphql/schema/type.ex

defmodule AppWeb.GraphQl.Schema.Type do

defmacro __using__(_opts) do

quote do

use Absinthe.Schema.Notation

import Absinthe.Resolution.Helpers

end

end

end

Use the dataloader in the schema

Using the dataloader is very simple: invoke the dataloader helper with a matching source name as a resolver, and that's it.

# lib/app_web/graphql/types/blog/post_types.ex

defmodule AppWeb.GraphQl.Blog.PostTypes do

use AppWeb.GraphQl.Schema.Type

object :post do

...

field :user, non_null(:user), resolve: dataloader(:basic)

end

end

Create a custom data source with filtering

Let's create a data source that will only fetch posts with "title-2" as a title. We have to return a query so we will use Ecto.Query for this.

# lib/app/blog/post/post_data_source.ex

defmodule App.Blog.PostDataSource do

import Ecto.Query

alias App.Blog.Post

def source do

Dataloader.Ecto.new(App.Repo, query: &query/2)

end

def query(Post, _params) do

from p in Post, where: p.title == "title-2"

end

# Fallback to default

def query(queryable, _params) do

queryable

end

end

Add the data source, with App.Blog.Post as the name.

# lib/app_web/graphql/schema.ex

defmodule AppWeb.GraphQl.Schema do

alias App.Blog

...

def context(context) do

loader =

Dataloader.new()

|> Dataloader.add_source(:basic, BasicDataSource.source())

|> Dataloader.add_source(Blog.Post, Blog.PostDataSource.source())

Map.put(context, :loader, loader)

end

end

Update the user's schema.

# lib/app/accounts/user.ex

schema "users" do

...

has_many :posts, App.Blog.Post

...

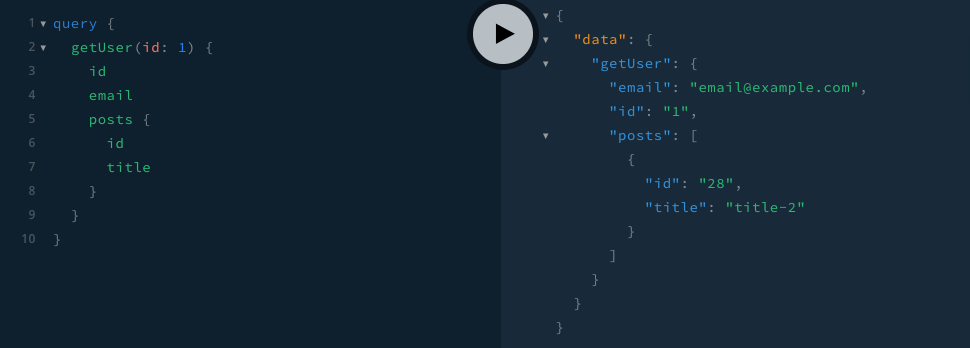

endAnd use the dataloader with a matching name.

# lib/app_web/graphql/types/accounts/user_types.ex

defmodule AppWeb.GraphQl.Accounts.UserTypes do

use AppWeb.GraphQl.Schema.Type

alias App.Blog.Post

object :user do

...

field :posts, list_of(:post), resolve: dataloader(Post)

end

end

Difference

Without batching or the Dataloader, we were fetching a user for each post separately, which resulted in the N+1 problem as presented below.

[debug] QUERY OK source="posts" db=0.5ms idle=962.5ms

SELECT b0."id", b0."title", b0."user_id", b0."inserted_at", b0."updated_at" FROM "posts" AS b0 []

↳ AppWeb.GraphQl.Blog.PostResolvers.list_posts/2, at: lib/app_web/graphql/resolvers/blog/post_resolvers.ex:18

[debug] QUERY OK source="users" db=0.1ms idle=963.1ms

SELECT a0."id", a0."email", a0."avatar", a0."inserted_at", a0."updated_at" FROM "users" AS a0 WHERE (a0."id" = $1) [1]

↳ anonymous fn/3 in AppWeb.GraphQl.Blog.PostTypes.__absinthe_function__/2, at: lib/app_web/graphql/types/blog/post_types.ex:14

[debug] QUERY OK source="users" db=0.1ms idle=963.3ms

SELECT a0."id", a0."email", a0."avatar", a0."inserted_at", a0."updated_at" FROM "users" AS a0 WHERE (a0."id" = $1) [1]

↳ anonymous fn/3 in AppWeb.GraphQl.Blog.PostTypes.__absinthe_function__/2, at: lib/app_web/graphql/types/blog/post_types.ex:14

[debug] QUERY OK source="users" db=0.1ms idle=963.5ms

SELECT a0."id", a0."email", a0."avatar", a0."inserted_at", a0."updated_at" FROM "users" AS a0 WHERE (a0."id" = $1) [1]

↳ anonymous fn/3 in AppWeb.GraphQl.Blog.PostTypes.__absinthe_function__/2, at: lib/app_web/graphql/types/blog/post_types.ex:14

...

With batching or the Dataloader, there are only two SQL queries. One to list posts and one to fetch all needed users.

[debug] QUERY OK source="posts" db=2.6ms idle=217.0ms

SELECT b0."id", b0."title", b0."user_id", b0."inserted_at", b0."updated_at" FROM "posts" AS b0 []

↳ AppWeb.GraphQl.Blog.PostResolvers.list_posts/2, at: lib/app_web/graphql/resolvers/blog/post_resolvers.ex:18

[debug] QUERY OK source="users" db=0.6ms queue=0.5ms idle=222.6ms

SELECT a0."id", a0."email", a0."avatar", a0."inserted_at", a0."updated_at", a0."id" FROM "users" AS a0 WHERE (a0."id" = ANY($1)) [[1, 4, 3, 2]]

↳ Dataloader.Source.Dataloader.Ecto.run_batch/2, at: lib/dataloader/ecto.ex:703

Relay

Relay is a set of conventions and specifications for building data-driven apps, developed by Facebook. It provides guidelines and best practices for structuring GraphQL schemas and implementing data fetching patterns in a way that is optimized for performance and usability.

The first thing is to add the required dependency.

# mix.exs

defp deps do

[

{:absinthe_relay, "~> 1.5.0"}

]

end

The next thing is to update our schema to use the Absinthe.Relay.Schema module. The module requires providing the :modern (targeting Relay v1.0 and later) or :classic (targeting Relay < v1.0) option. In this tutorial, I will focus on the modern version.

# lib/app_web/graphql/schema.ex

defmodule AppWeb.GraphQl.Schema do

use Absinthe.Schema

use Absinthe.Relay.Schema, :modern

...

end

And let's update our notation macro.

# lib/app_web/graphql/schema/type.ex

defmodule AppWeb.GraphQl.Schema.Type do

defmacro __using__(_opts) do

quote do

use Absinthe.Schema.Notation

use Absinthe.Relay.Schema.Notation, :modern

import Absinthe.Resolution.Helpers

end

end

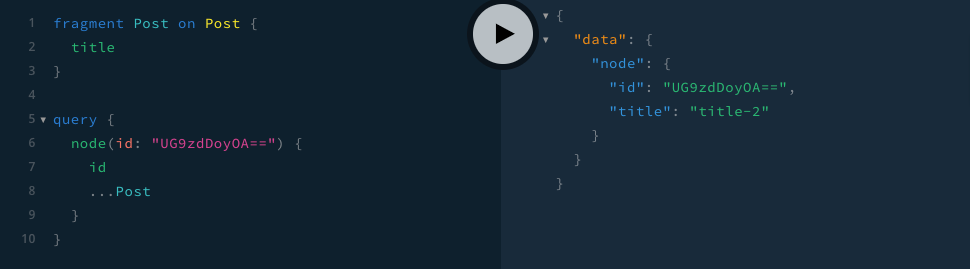

endNodes

Relay defines a Node interface that objects in the schema can implement to ensure they have a globally unique identifier. The Node interface provides a way to fetch individual objects by their global ID, making it easy to reference and fetch objects across different parts of the application.

Define node interface

Creating global IDs by hand would be very troublesome, and Absinthe's authors know that, so they implemented the node interface that automatically assigns global IDs to the resources. This interface pattern-matches given resources to determine their type, and the global ID is then built from the type name and the internal ID, for example, "Post:1", which by default is Base64-encoded before it is sent to clients.

# lib/app_web/graphql/schema.ex

defmodule AppWeb.GraphQl.Schema do

alias App.Blog.Post

alias App.Accounts.User

...

node interface do

resolve_type fn

%Post{}, _ -> :post

%User{}, _ -> :user

_, _ -> nil

end

end

...

end

Note: Absinthe allows creating custom global ID translators.
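To get a feel for what a global ID looks like with the default translator, here is a small sketch that builds and reads one by hand using only the standard library. It assumes the default format is the Base64 encoding of the type name and the ID joined with a colon, which matches the "VXNlcjox" value used later in this guide for the user with ID 1. The module is hypothetical; in the app itself, use the functions from Absinthe.Relay.Node.

```elixir
defmodule GlobalIdSketch do
  # Illustration of the assumed default format only; in the app use
  # Absinthe.Relay.Node.to_global_id/3 and from_global_id/2 instead.

  def encode(type_name, id), do: Base.encode64("#{type_name}:#{id}")

  def decode(global_id) do
    with {:ok, decoded} <- Base.decode64(global_id),
         [type_name, id] <- String.split(decoded, ":", parts: 2) do
      {:ok, %{type: type_name, id: id}}
    else
      # Not valid Base64, or no colon separating type and ID.
      _ -> {:error, :invalid_global_id}
    end
  end
end

IO.inspect(GlobalIdSketch.encode("User", 1))
IO.inspect(GlobalIdSketch.decode("VXNlcjox"))
```

Note that the decoded ID is a string, so it may need converting before it is used as an integer key.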

Update types to use the node macro

Now, we have to update all the objects for which we want to use the global ID, so basically, every object that has an ID. To do so we have to provide the node macro as a prefix to the object macro.

# lib/app_web/graphql/types/blog/post_types.ex

# Add node macro before object

node object(:post) do

# Remove id field

# field :id, non_null(:id)

...

end

Create the node field

The node field provides a unified query to fetch an object based on a global ID. To create such a query, we have to create a field with the node macro that is prefixed before the field macro. As you can see, in the resolver, I'm matching the type with the values provided in the node interface.

# lib/app_web/graphql/schema.ex

query do

node field do

resolve fn

%{type: :user, id: id}, _ ->

{:ok, App.Accounts.get_user(id)}

%{type: :post, id: id}, _ ->

{:ok, App.Blog.get_post(id)}

_, _ ->

{:error, :invalid_global_id}

end

end

...

end

Convert global IDs to internal ones

When using global IDs with normal fields the IDs are not converted automatically but Absinthe provides some ways to do it manually. The first one is the ParseIDs middleware. Let's add it to our notation macro.

# lib/app_web/graphql/schema/type.ex

defmodule AppWeb.GraphQl.Schema.Type do

defmacro __using__(_opts) do

quote do

...

alias Absinthe.Relay.Node.ParseIDs

end

end

end

To use the middleware, we have to provide a keyword list. You can imagine this keyword list as a path to a given ID with a type at the end, e.g.,

[user_id: :user] - the user_id field is a :user type

field :create_post, type: non_null(:post) do

arg :user_id, non_null(:id)

middleware ParseIDs, user_id: :user

...resolve

end

[input: [user_id: :user]] - the user_id is contained in the input object and the user_id is a :user type

input_object :post_input do

field :user_id, non_null(:id)

end

field :create_post, type: non_null(:post) do

arg :input, non_null(:post_input)

middleware ParseIDs, input: [user_id: :user]

...resolve

end

Note: To provide multiple types use a list

user_id: [:user, :admin]

During testing, the ParseIDs middleware is not useful, so instead use the from_global_id function. Provide a global ID to the first argument and the schema to the second. It is recommended to pattern-match the result to make sure that the given ID is of the correct type.

alias AppWeb.GraphQl.Schema

...

{:ok, %{id: id, type: :user}} = Absinthe.Relay.Node.from_global_id(global_id, Schema)

Convert internal IDs to global ones

But what if I want to convert a numerical ID to a global one? Use the to_global_id function. Pass the type as the first argument, the ID as the second, and the schema as the third.

alias AppWeb.GraphQl.Schema

...

user = AccountsFixtures.user_fixture()

global_id = Absinthe.Relay.Node.to_global_id("User", user.id, Schema)

Mutations

Relay defines conventions for structuring mutations in a schema, including input types, output types, and payload fields. Mutations follow a standardized pattern that allows clients to update data in the GraphQL server and receive consistent responses.

Update mutations to use Relay's convention

Let's take a look at the anatomy of Relay's mutations:

- payload macro: put it as a prefix to the field macro, and remove the type from the field.

- input macro: instead of using args to define an input, use the input macro. It will create a GraphQL type named, e.g., "CreatePostInput". In the resolver we don't have to pattern match on an input key, the arguments will be "unpacked".

- output macro: instead of defining an output in the field macro, use the output macro. It will create a GraphQL type named, e.g., "CreatePostPayload". We have to update the resolver to return, e.g., a post like {:ok, %{post: post}} instead of {:ok, post}.

# lib/app_web/graphql/mutations/blog/post_mutations.ex

defmodule AppWeb.GraphQl.Blog.PostMutations do

...

object :post_mutations do

# Add `payload` macro before `field`

payload field :create_post do

# Replace `args` with `input` macro

input do

field :title, non_null(:string)

field :user_id, non_null(:id)

end

# Remove `type: :post` from the `field` and add `output` macro inside of the mutation

output do

field :post, non_null(:post)

end

middleware ParseIDs, user_id: :user

resolve &PostResolvers.create_post/2

end

end

end

The Relay convention forces mutations to have a predictable structure, a single required input, and an expected output.

mutation CreatePost($input: CreatePostInput!) {

createPost(input: $input) {

post {

id

title

}

}

}

Note: Remember to update subscriptions and tests to handle new output.

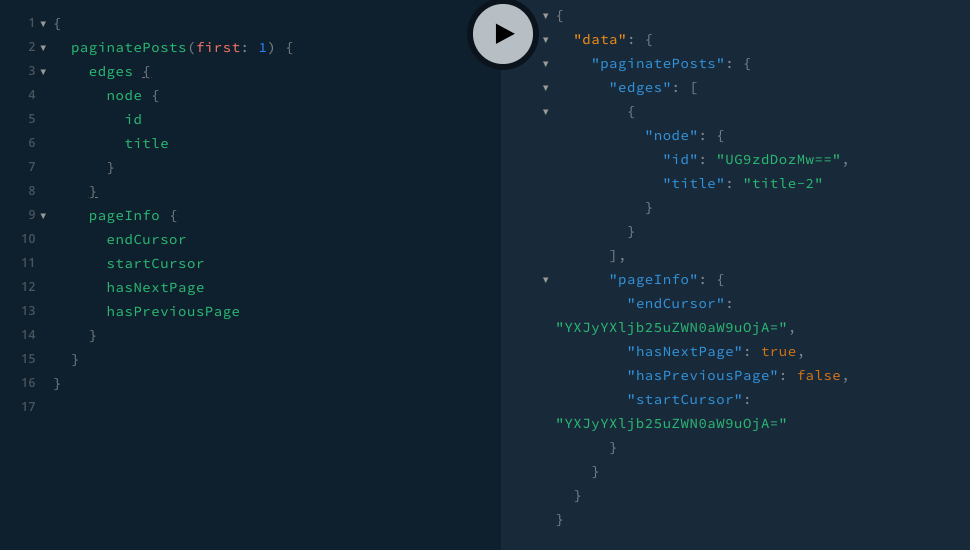

Connections

In Relay, connections are a concept for paginating through lists of data in a schema. The connections provide a standardized and efficient way to navigate through large datasets with cursor-based pagination.
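The mechanics of cursor-based pagination can be sketched over a plain list. The example below is an illustration only: the "offset:N" cursor format, the module, and the function names are assumptions made for this sketch, and it is not how Absinthe Relay builds its connections. It only mirrors the first/after arguments and the edges with cursors that a connection exposes.

```elixir
defmodule CursorSketch do
  # Illustration only: paginates an in-memory list with opaque cursors.
  # The "offset:N" cursor format is an assumption made for this sketch.

  def cursor(offset), do: Base.encode64("offset:#{offset}")

  # Without a cursor we start from the beginning.
  def offset(nil), do: 0

  def offset(cursor) do
    "offset:" <> n = Base.decode64!(cursor)
    # Continue right after the item the cursor points at.
    String.to_integer(n) + 1
  end

  # Mirrors the `first` and `after` arguments of a Relay connection.
  def paginate(items, first, after_cursor \\ nil) do
    start = offset(after_cursor)

    edges =
      items
      |> Enum.with_index()
      |> Enum.drop(start)
      |> Enum.take(first)
      |> Enum.map(fn {item, index} -> %{node: item, cursor: cursor(index)} end)

    %{edges: edges, has_next_page: start + first < length(items)}
  end
end

items = ["a", "b", "c", "d", "e"]
page_one = CursorSketch.paginate(items, 2)
page_two = CursorSketch.paginate(items, 2, List.last(page_one.edges).cursor)

IO.inspect(Enum.map(page_one.edges, & &1.node))
IO.inspect(Enum.map(page_two.edges, & &1.node))
```

The client never interprets the cursor. It only passes the cursor of the last edge it received back as the after argument to get the next page.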

We will replace the Paginator library with the connections. Remove the library and all the code related to it.

Using connections to handle pagination

The first thing is to create a context function that will handle the pagination. The Absinthe Relay library provides us with the from_query function, to which we pass a query that lists the requested data, a repo function, and the pagination arguments. The query has to be ordered by something.

# lib/app/blog.ex

defmodule App.Blog do

alias Absinthe.Relay.Connection

...

def paginate_posts(args) do

query = from p in Post, order_by: [desc: p.inserted_at]

Connection.from_query(query, &Repo.all/1, args)

end

end

Using the connection macro, let's define the connection type. This will create the connection and edge types for a given node type.

# lib/app_web/graphql/types/blog/post_types.ex

defmodule AppWeb.GraphQl.Blog.PostTypes do

use AppWeb.GraphQl.Schema.Type

connection(node_type: :post)

end

Create a resolver function.

# lib/app_web/graphql/resolvers/blog/post_resolvers.ex

defmodule AppWeb.GraphQl.Blog.PostResolvers do

def paginate_posts(args, _resolution) do

Blog.paginate_posts(args)

end

end

And add a connection field, using the connection macro as a prefix to the field macro.

# lib/app_web/graphql/queries/blog/post_queries.ex

defmodule AppWeb.GraphQl.Blog.PostQueries do

use AppWeb.GraphQl.Schema.Type

alias AppWeb.GraphQl.Blog.PostResolvers

object :post_queries do

...

connection field :paginate_posts, node_type: :post do

resolve &PostResolvers.paginate_posts/2

end

end

end

Custom scalars

The Absinthe library allows us to simply create new scalars. We have to use the scalar macro and use the serialize and parse macros inside. The first one will run on the outgoing data and the second one on the incoming data. Let's create a new module for the new scalars:

# lib/app_web/graphql/types/scalar_types.ex

defmodule AppWeb.GraphQl.ScalarTypes do

use AppWeb.GraphQl.Schema.Type

@desc "Scalar's description"

scalar :custom_title, name: "Title" do

serialize fn

nil ->

nil

title ->

"Custom #{title}"

end

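# parse receives an Absinthe.Blueprint.Input struct, not a raw string,

# and must return {:ok, value} or :error

parse fn

%Absinthe.Blueprint.Input.String{value: "Custom " <> title} ->

{:ok, title}

%Absinthe.Blueprint.Input.String{value: title} ->

{:ok, title}

%Absinthe.Blueprint.Input.Null{} ->

{:ok, nil}

_ ->

:error

end

end

end

And import types from the module in the schema definition.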

# lib/app_web/graphql/schema.ex

defmodule AppWeb.GraphQl.Schema do

...

import_types AppWeb.GraphQl.ScalarTypes

...

end

Upload a file

If you need to upload a file, the Absinthe library has your back because it has built-in support for uploading files with Plug. We will implement the upload with the Waffle library.

Install and configure Waffle

Let's start with installing and configuring the Waffle library. Add new dependencies:

# mix.exs

defp deps do

[

{:waffle, "~> 1.1"},

{:waffle_ecto, "~> 0.0.12"}

]

end

Configure the app's endpoint to expose uploaded data.

# lib/app_web/endpoint.ex

defmodule AppWeb.Endpoint do

...

# Add after first Plug.Static

plug Plug.Static, at: "/uploads", from: Path.expand(~c"./uploads"), gzip: false

...

end

In the tutorial, I will only configure the local storage for the development...

# config/dev.exs

config :waffle,

storage: Waffle.Storage.Local,

root_path: "uploads",

asset_host: "http://localhost:4000"

...and test environments.

# config/test.exs

config :waffle,

storage: Waffle.Storage.Local,

root_path: "uploads/tests",

asset_host: "http://localhost"

Create Waffle uploader

Use the mix waffle.g image_file command to generate a new uploader and configure the uploader to generically handle image files.

# lib/app_web/uploaders/image_file.ex

defmodule App.Uploaders.ImageFile do

use Waffle.Definition

use Waffle.Ecto.Definition

@versions [:original]

@allowed_extensions ~w(.jpg .jpeg .gif .png .webp)

def validate({file, _}) do

file_extension = file.file_name |> Path.extname() |> String.downcase()

if Enum.member?(@allowed_extensions, file_extension) do

:ok

else

{:error, Gettext.dgettext(AppWeb.Gettext, "errors", "invalid file type")}

end

end

def storage_dir(_version, {_file, _scope}) do

get_root_path() <> "/images"

end

def get_root_path do

Application.get_env(:waffle, :root_path)

end

end

Add the image column to the "posts" table and the image field to the post's schema

# lib/app/blog/post.ex

alias App.Uploaders.ImageFile

use Waffle.Ecto.Schema

...

schema "posts" do

field :image, ImageFile.Type

end

...

def changeset(post, attrs) do

...

|> cast_attachments(attrs, [:image], allow_urls: true)

end

Import upload type in the schema

The Absinthe library has a built-in type to handle file uploads. Let's import the :upload type from the Absinthe.Plug.Types module.

# lib/app_web/graphql/schema.ex

defmodule AppWeb.GraphQl.Schema do

...

# Upload type

import_types Absinthe.Plug.Types

...

end

Update the types

We want to return a URL to the file, so the return type has to be a string.

# lib/app_web/graphql/types/blog/post_types.ex

defmodule AppWeb.GraphQl.Blog.PostTypes do

object :post do

...

field :image, :string

end

end

Update the mutation

We have to use the imported :upload type in the input of a mutation.

# lib/app_web/graphql/mutations/blog/post_mutations.ex

defmodule AppWeb.GraphQl.Blog.PostMutations do

payload field :create_post do

input do

...

field :image, :upload

end

...

end

end

Test the mutations using cURL

Let's make use of the cURL and test the mutation with the following command.

curl -X POST \

-F query="mutation { createPost(input: {title: \"the title\", user_id: \"VXNlcjox\", image: \"image_png\"}) { post { id image title }}}" \

-F image_png=@image.png \

localhost:4000/api/graphql

The mutation works but returns an error because the image uploader doesn't return a string with a URL, but instead a map with a filename.

Create a scalar to handle files

We have to create a scalar that will take care of the data that the image uploader returns.

# lib/app_web/graphql/types/scalar_types.ex

defmodule AppWeb.GraphQl.ScalarTypes do

use AppWeb.GraphQl.Schema.Type

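alias App.Uploaders.ImageFile

# Extensions that are served as image URLs (same list as in the uploader)

@image_extensions ~w(.jpg .jpeg .gif .png .webp)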

@desc "A URL to a file"

scalar :file, name: "File" do

serialize fn

nil ->

""

%{file_name: file_name} = file ->

ext = file_name |> Path.extname() |> String.downcase()

if Enum.member?(@image_extensions, ext) do

ImageFile.url(file)

else

""

end

end

end

end

And use the :file scalar in the types.

# lib/app_web/graphql/types/blog/post_types.ex

defmodule AppWeb.GraphQl.Blog.PostTypes do

object :post do

...

field :image, :file

end

end

Testing uploads

Testing mutations using cURL every time we want to check if uploads work is nonsensical. So let's create some tests that will do the job for us.

Remove the uploaded file after the tests

The first thing we have to do is to configure the conn test case to delete uploaded files after the tests end. We don't want to flood our machines with unnecessary data.

# test/support/conn_case.ex

defmodule AppWeb.ConnCase do

...

setup tags do

App.DataCase.setup_sandbox(tags)

on_exit(fn ->

# Removing uploaded files

File.rm_rf!("#{File.cwd!()}/#{get_root_path()}")

end)

{:ok, conn: Phoenix.ConnTest.build_conn()}

end

def get_root_path do

Application.get_env(:waffle, :root_path)

end

end

Do the upload

We need a file to upload so let's create an empty file in test/support/images/sample-img.png. Uploading files in the tests works nearly the same as in cURL.

- Define input, where the value of the upload is the name of the file in the request body.

- Create a new map with the Plug.Upload.

- Add the map to the body of the request under the same key as defined in the input.

post_params = %{

title: "title-1",

image: "image_png",

user_id: "global_id"

}

image = %Plug.Upload{

content_type: "image/png",

filename: "sample-img.png",

path: "./test/support/images/sample-img.png"

}

gql_post(%{

query: @create_post,

variables: %{"input" => post_params},

image_png: image

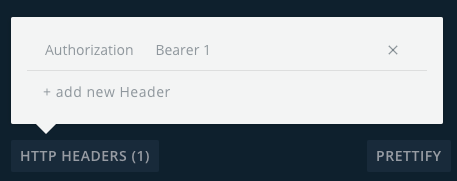

})Basic auth

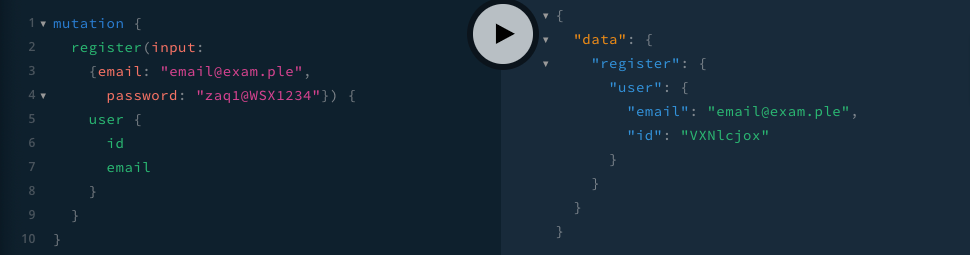

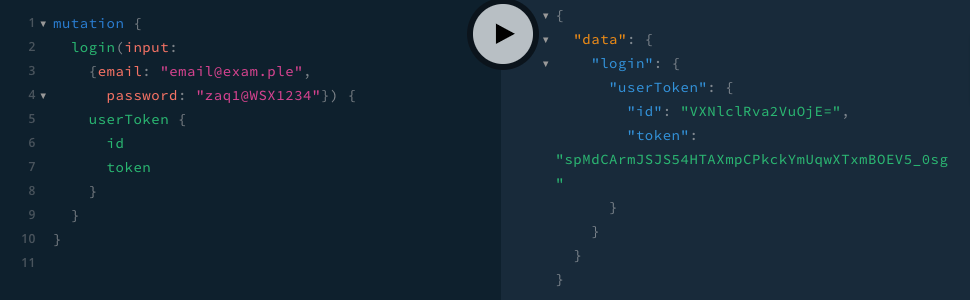

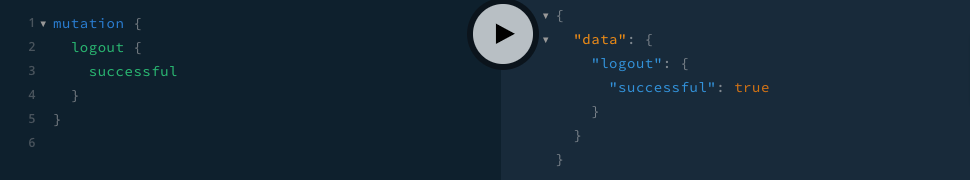

We created a user earlier, but it is still not the time to implement phx.gen.auth. For now, let's use the current infrastructure to demonstrate how to implement a simple authentication flow for our schema.

Create a plug to fetch the current user

When creating the auth for a REST API, we almost always create a plug to fetch the user using a token from a cookie or header. Here we will do the same, but for now, we will use the user's ID as a token. Never do this! It is only for demonstration purposes.

Let's create a new plug that will fetch a user and put the user in the context under a current_user key. The assign_context function will help us to assign values into the context.

# lib/app_web/plugs/fetch_user_plug.ex

defmodule AppWeb.FetchUserPlug do

@behaviour Plug

import Plug.Conn

alias App.Accounts

def init(opts), do: opts

def call(conn, _opts) do

with ["Bearer " <> user_id] <- get_req_header(conn, "authorization"),

%Accounts.User{} = user <- Accounts.get_user(user_id) do

Absinthe.Plug.assign_context(conn, :current_user, user)

else

_user_id_missing_or_incorrect -> conn

end

end

end

Use the plug in the GraphQL router.

# lib/app_web/graphql/router.ex

defmodule AppWeb.GraphQl.Router do
  use Plug.Router

  alias AppWeb.FetchUserPlug

  plug :match
  plug FetchUserPlug
  plug :dispatch

  ...
end

Fetch a user in the user socket

We probably want to access the user in subscriptions, so let's update the user socket as well.

# lib/app_web/channels/user_socket.ex

defmodule AppWeb.UserSocket do
  use Phoenix.Socket

  use Absinthe.Phoenix.Socket,
    schema: AppWeb.GraphQl.Schema

  alias App.Accounts
  alias App.Accounts.User

  @impl true
  def connect(params, socket, _connect_info) do
    params = Map.new(params, fn {k, v} -> {String.downcase(k), v} end)

    socket =
      case current_user(params) do
        nil ->
          socket

        current_user ->
          socket
          |> assign(:current_user, current_user)
          |> Absinthe.Phoenix.Socket.put_options(context: %{current_user: current_user})
      end

    {:ok, socket}
  end

  defp current_user(%{"authorization" => "Bearer " <> token}) do
    with %User{} = user <- Accounts.get_user(token) do
      user
    end
  end

  defp current_user(_), do: nil

  @impl true
  def id(%Phoenix.Socket{assigns: %{current_user: %{id: id}}}), do: "user:#{id}"
  def id(_socket), do: nil
end
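
To make the parameter handling in connect/3 easier to follow, here is a minimal plain-Elixir sketch of the same two steps: lowercasing the parameter keys and pattern-matching on the "Bearer " prefix. The SocketAuthSketch module and its in-memory user map are assumptions made for illustration; in the app the lookup is done by Accounts.get_user/1.

```elixir
defmodule SocketAuthSketch do
  # Hypothetical in-memory stand-in for App.Accounts.get_user/1.
  @users %{"1" => %{id: "1", name: "Jane"}}

  def get_user(id), do: Map.get(@users, id)

  # Lowercase the keys so "Authorization" and "authorization" are treated alike.
  def normalize(params) do
    Map.new(params, fn {k, v} -> {String.downcase(k), v} end)
  end

  # Matches only when the value starts with the "Bearer " prefix.
  def current_user(%{"authorization" => "Bearer " <> token}), do: get_user(token)

  # Any other shape (missing key, different scheme) means no user.
  def current_user(_params), do: nil
end

params = SocketAuthSketch.normalize(%{"Authorization" => "Bearer 1"})

IO.inspect(SocketAuthSketch.current_user(params))
IO.inspect(SocketAuthSketch.current_user(%{"authorization" => "Basic 1"}))
IO.inspect(SocketAuthSketch.current_user(%{}))
```

Only the first call finds a user; the other two return nil, which is the case where connect/3 leaves the socket untouched.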

Use current user in resolvers

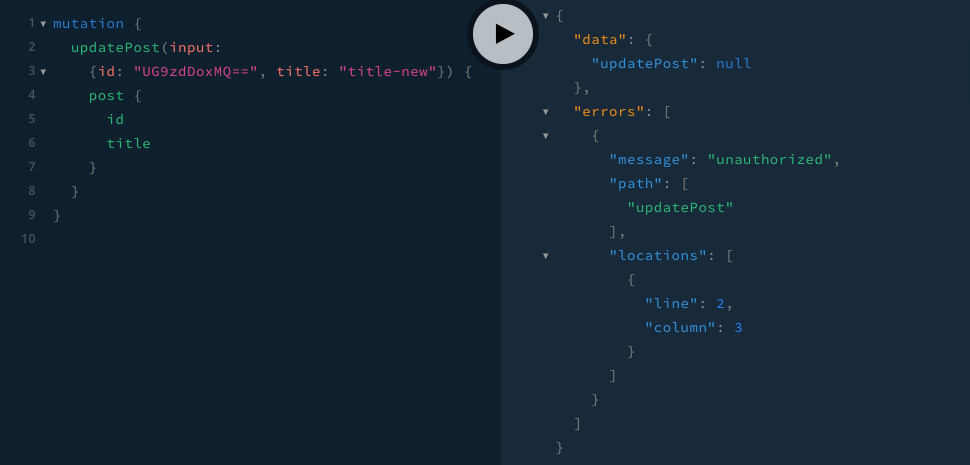

At the start, we were providing the user's ID to the mutations in the input. Let's remove the user_id field from the inputs, and from now on, use the user that we have put into the context. Update resolvers to put the user_id into the args when available and return the "unauthorized" error when not.

# lib/app_web/graphql/resolvers/blog/post_resolvers.ex

defmodule AppWeb.GraphQl.Blog.PostResolvers do
  ...

  def create_post(args, %{context: %{current_user: %{id: user_id}}}) do
    args = Map.put(args, :user_id, user_id)

    with {:ok, %Post{} = post} <- Blog.create_post(args) do
      {:ok, %{post: post}}
    end
  end

  def create_post(_args, _resolution) do
    {:error, :unauthorized}
  end
end
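
The clause selection can be tried out without Absinthe. The following is a rough sketch in which a plain map stands in for the resolution, and insert_post/1 is a hypothetical stand-in for Blog.create_post/1 that simply echoes the attributes back.

```elixir
defmodule ResolverSketch do
  # Hypothetical stand-in for Blog.create_post/1: echoes the attributes back.
  defp insert_post(attrs), do: {:ok, attrs}

  # Selected only when the context carries a user with an :id.
  def create_post(args, %{context: %{current_user: %{id: user_id}}}) do
    args = Map.put(args, :user_id, user_id)

    with {:ok, post} <- insert_post(args) do
      {:ok, %{post: post}}
    end
  end

  # Fallback for every other context shape, including an empty one.
  def create_post(_args, _resolution), do: {:error, :unauthorized}
end

signed_in = %{context: %{current_user: %{id: 7}}}
anonymous = %{context: %{}}

IO.inspect(ResolverSketch.create_post(%{title: "title-1"}, signed_in))
IO.inspect(ResolverSketch.create_post(%{title: "title-1"}, anonymous))
```

With a user in the context, the user's ID is merged into the arguments before the post is created; without one, the second clause returns the error tuple and nothing is created.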

Add current user query

Now is the time to create a query that will return a user that is currently logged in.

# lib/app_web/graphql/queries/accounts/user_queries.ex

defmodule AppWeb.GraphQl.Accounts.UserQueries do
  use AppWeb.GraphQl.Schema.Type

  alias AppWeb.GraphQl.Accounts.UserResolvers

  object :user_queries do
    field :current_user, :user do
      resolve &UserResolvers.current_user/2
    end
  end
end

Get the user from the context.

# lib/app_web/graphql/resolvers/accounts/user_resolvers.ex

defmodule AppWeb.GraphQl.Accounts.UserResolvers do
  ...

  def current_user(_args, %{context: %{current_user: user}}) do
    {:ok, user}
  end

  def current_user(_args, _resolution) do
    {:error, :unauthorized}
  end
end

Authenticate users with a middleware

Instead of pattern-matching on the context in every resolver, we can create two simple middleware modules that enforce the presence or absence of the user.

Authenticated middleware

To create the Authenticated middleware, we only need to check whether a user is available in the context. When the user is not available, we don't want to run the rest of the operation, so we use the put_result helper function. It puts the error on the resolution and changes the state of the resolution to :resolved.

# lib/app_web/graphql/middleware/authenticated.ex

defmodule AppWeb.GraphQl.Middleware.Authenticated do
  @behaviour Absinthe.Middleware

  def call(%{context: %{current_user: %{id: _}}} = resolution, _config) do
    resolution
  end

  def call(resolution, _config) do
    Absinthe.Resolution.put_result(resolution, {:error, :unauthorized})
  end
end

Unauthenticated middleware

The Unauthenticated middleware works the same as the Authenticated one. The only difference is that we don't want a user in the context.

# lib/app_web/graphql/middleware/unauthenticated.ex

defmodule AppWeb.GraphQl.Middleware.Unauthenticated do
  @behaviour Absinthe.Middleware

  def call(%{context: %{current_user: %{id: _}}} = resolution, _config) do
    Absinthe.Resolution.put_result(resolution, {:error, :forbidden})
  end

  def call(resolution, _config) do
    resolution
  end
end

Add middleware to authorize a user

The next step toward a more complete auth setup is to authorize a user against a resource.

Load resource middleware

If we want to authorize against a resource we have to load the resource into the context. I have created a middleware that will do that for us. The middleware handles getters that return nil as well as the ones that raise Ecto.NoResultsError. The middleware has 3 configurable options:

- getter, a function that will load the resource, which is required.

- id_path, a path to the ID of the resource in the arguments of an operation, defaults to [:id].

- resource_key, a key under which the resource will be available. Useful when we want to load multiple resources, defaults to :resource.

# lib/app_web/graphql/middleware/load_resource.ex

defmodule AppWeb.GraphQl.Middleware.LoadResource do
  @behaviour Absinthe.Middleware

  def call(%{state: :resolved} = resolution, _config), do: resolution

  def call(%{arguments: arguments, context: context} = resolution, config) do
    getter = config[:getter] || raise ArgumentError, ":getter is required"
    id_path = config[:id_path] || [:id]
    resource_key = config[:resource_key] || :resource

    id = get_in(arguments, id_path)

    getter_result =
      try do
        getter.(id)
      rescue
        e in Ecto.NoResultsError -> e
      end

    case getter_result do
      nil ->
        Absinthe.Resolution.put_result(resolution, {:error, :not_found})

      %Ecto.NoResultsError{} ->
        Absinthe.Resolution.put_result(resolution, {:error, :not_found})

      resource ->
        %{
          resolution
          | context: Map.put(context, resource_key, resource)
        }
    end
  end

  def call(resolution, _config), do: resolution
end

Authorize middleware

Once the resource is loaded into the context, we can finally authorize the user against it. I have created a middleware that will handle this. The middleware requires the current user and the resource to be present in the context. The middleware has 3 configurable options:

- resource_key, a key under which a resource will be available. Useful when we want to authorize multiple resources, defaults to :resource.

- type, a type of authorization, that allows expansion of the default authorize function, defaults to :owner.

- auth_fn, a custom authorization function.

# lib/app_web/graphql/middleware/authorize_resource.ex

defmodule AppWeb.GraphQl.Middleware.AuthorizeResource do
  @behaviour Absinthe.Middleware

  alias App.Accounts.User

  def call(%{state: :resolved} = resolution, _config), do: resolution

  def call(%{context: context} = resolution, config) do
    resource_key = config[:resource_key] || :resource
    auth_type = config[:type] || :owner
    auth_fn = config[:auth_fn] || (&authorize/3)

    resource = Map.get(context, resource_key)
    user = Map.get(context, :current_user)

    if run_auth(auth_fn, user, resource, auth_type) do
      resolution
    else
      Absinthe.Resolution.put_result(resolution, {:error, :unauthorized})
    end
  end

  def call(resolution, _config), do: resolution

  defp run_auth(auth_fn, user, resource, _type) when is_function(auth_fn, 2) do
    auth_fn.(user, resource)
  end

  defp run_auth(auth_fn, user, resource, type) when is_function(auth_fn, 3) do
    auth_fn.(user, resource, type)
  end

  defp run_auth(_auth_fn, _user, _resource, _type) do
    raise "Invalid authorization function"
  end

  defp authorize(%User{id: user_id}, %{user_id: user_id}, :owner), do: true
  defp authorize(%User{id: user_id}, %User{id: user_id}, :owner), do: true
  defp authorize(_, _, _), do: false
end

Note: Remember to add all of the new middleware to the notation macro.

Usage

Here is an example of how to use all of the middleware together.

# Mutation

payload field :update_post do
  middleware Authenticated

  input do
    field :id, non_null(:id)
    field :title, non_null(:string)
  end

  output do
    field :post, non_null(:post)
  end

  middleware ParseIDs, id: :post
  middleware LoadResource, getter: &Blog.get_post!/1
  middleware AuthorizeResource

  resolve &PostResolvers.update_post/2
end

# Resolver

def update_post(args, %{context: %{resource: post}}) do
  with {:ok, post} <- Blog.update_post(post, args) do
    {:ok, %{post: post}}
  end
end
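
To see how the chain short-circuits, the pipeline can be approximated in plain Elixir. This is only a sketch under stated assumptions: plain maps stand in for %Absinthe.Resolution{}, the PipelineSketch module and its function names are hypothetical, and put_result/2 here only mimics the effect described earlier (recording the error and marking the resolution as :resolved).

```elixir
defmodule PipelineSketch do
  # Plain maps stand in for %Absinthe.Resolution{}; only the keys used here are modeled.

  # Like the Authenticated middleware: pass through only when a user is present.
  def authenticated(%{context: %{current_user: %{id: _}}} = res), do: res
  def authenticated(res), do: put_result(res, {:error, :unauthorized})

  # Like LoadResource: skip when already resolved, otherwise load by the :id argument.
  def load_resource(%{state: :resolved} = res, _getter), do: res

  def load_resource(%{arguments: %{id: id}, context: ctx} = res, getter) do
    case getter.(id) do
      nil -> put_result(res, {:error, :not_found})
      resource -> %{res | context: Map.put(ctx, :resource, resource)}
    end
  end

  # Like AuthorizeResource with the default :owner check.
  def authorize(%{state: :resolved} = res), do: res
  def authorize(%{context: %{current_user: %{id: id}, resource: %{user_id: id}}} = res), do: res
  def authorize(res), do: put_result(res, {:error, :unauthorized})

  # Mimics the effect of Absinthe.Resolution.put_result/2 for error tuples.
  defp put_result(res, {:error, reason}), do: %{res | state: :resolved, errors: [reason]}

  def run(res, getter) do
    res |> authenticated() |> load_resource(getter) |> authorize()
  end
end

# Hypothetical in-memory data instead of Blog.get_post!/1.
posts = %{"1" => %{id: "1", user_id: "u1"}}
getter = &Map.get(posts, &1)
base = %{state: :unresolved, errors: [], arguments: %{id: "1"}, context: %{}}

owner = PipelineSketch.run(%{base | context: %{current_user: %{id: "u1"}}}, getter)
stranger = PipelineSketch.run(%{base | context: %{current_user: %{id: "u2"}}}, getter)
anonymous = PipelineSketch.run(base, getter)

missing =
  PipelineSketch.run(
    %{base | arguments: %{id: "9"}, context: %{current_user: %{id: "u1"}}},
    getter
  )

IO.inspect({owner.state, owner.errors})
IO.inspect({stranger.state, stranger.errors})
IO.inspect({anonymous.state, anonymous.errors})
IO.inspect({missing.state, missing.errors})
```

Only the owner reaches the end of the chain with the state still :unresolved, which is the case where the resolver would run. The other three are marked :resolved by the first failing step, and the later steps pass them through unchanged.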

Update GraphQL test helpers to handle authentication